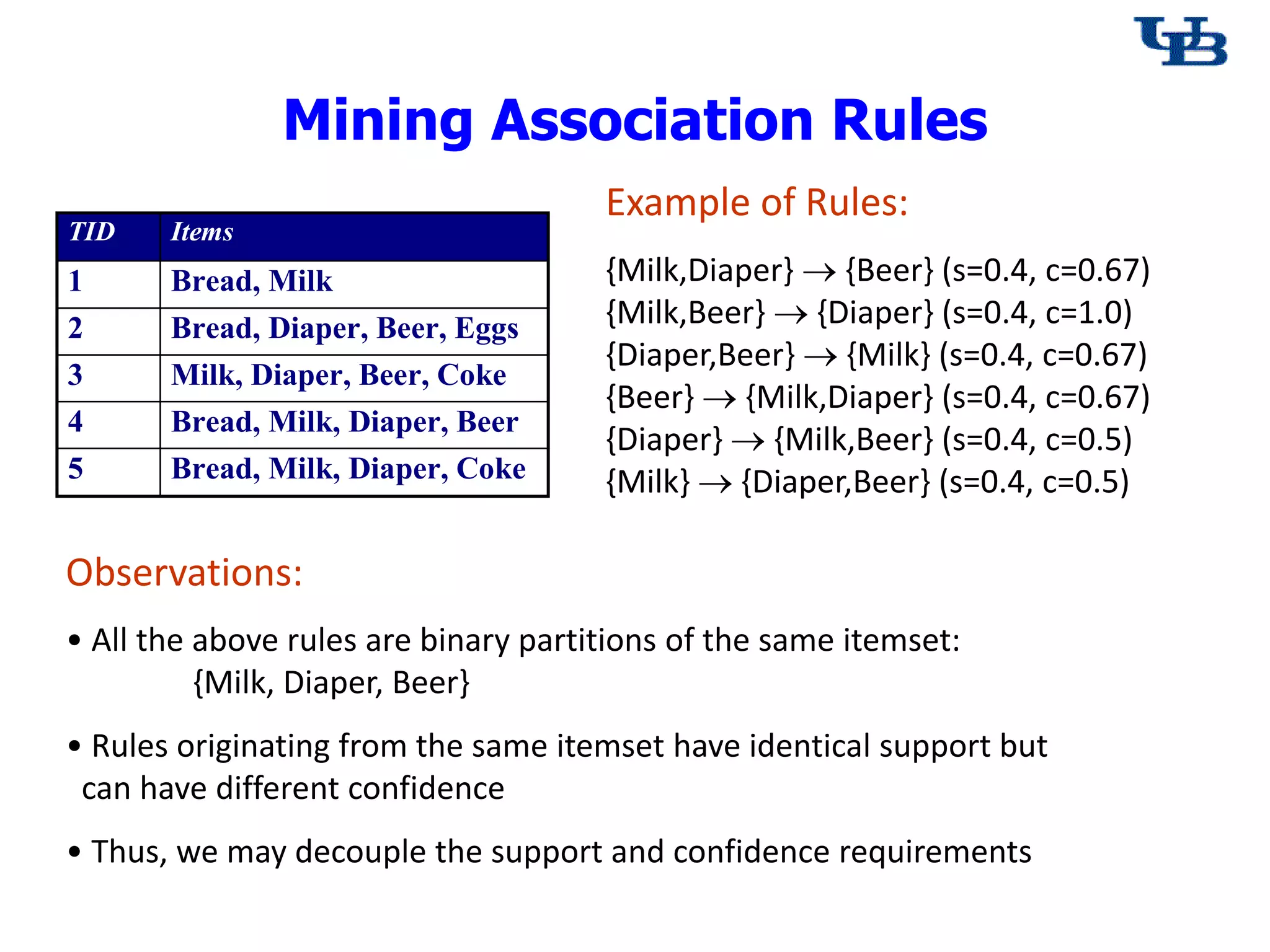

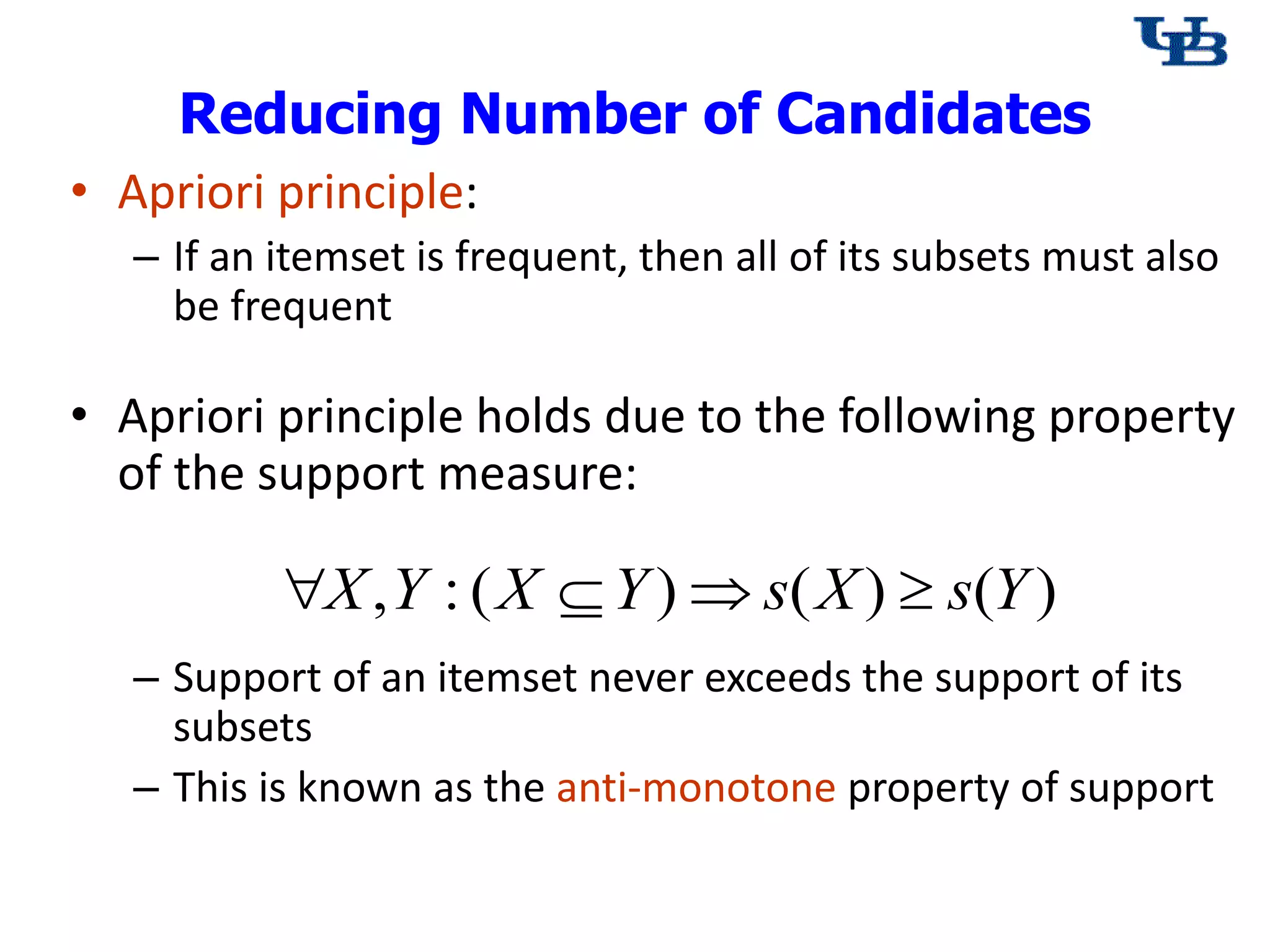

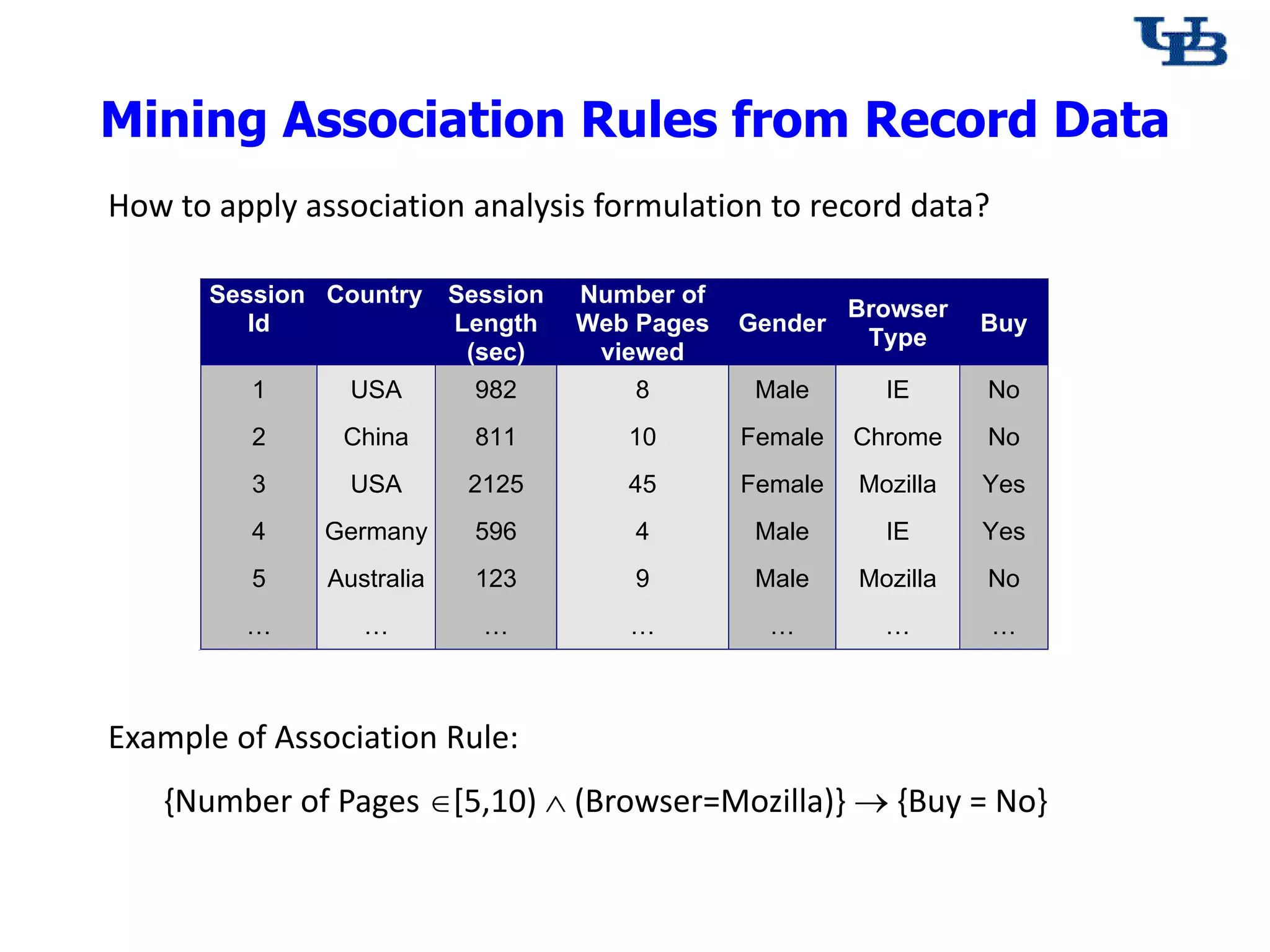

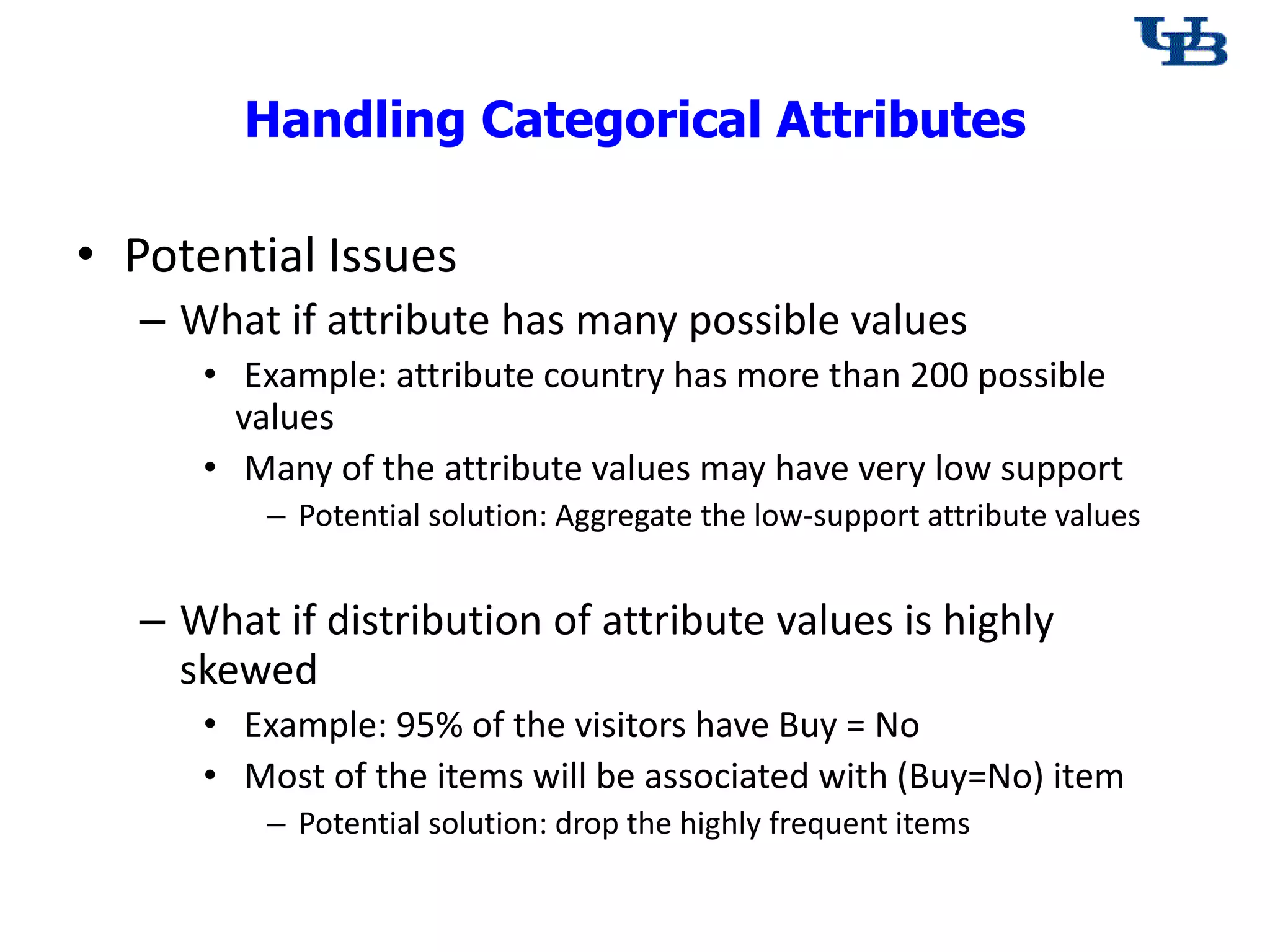

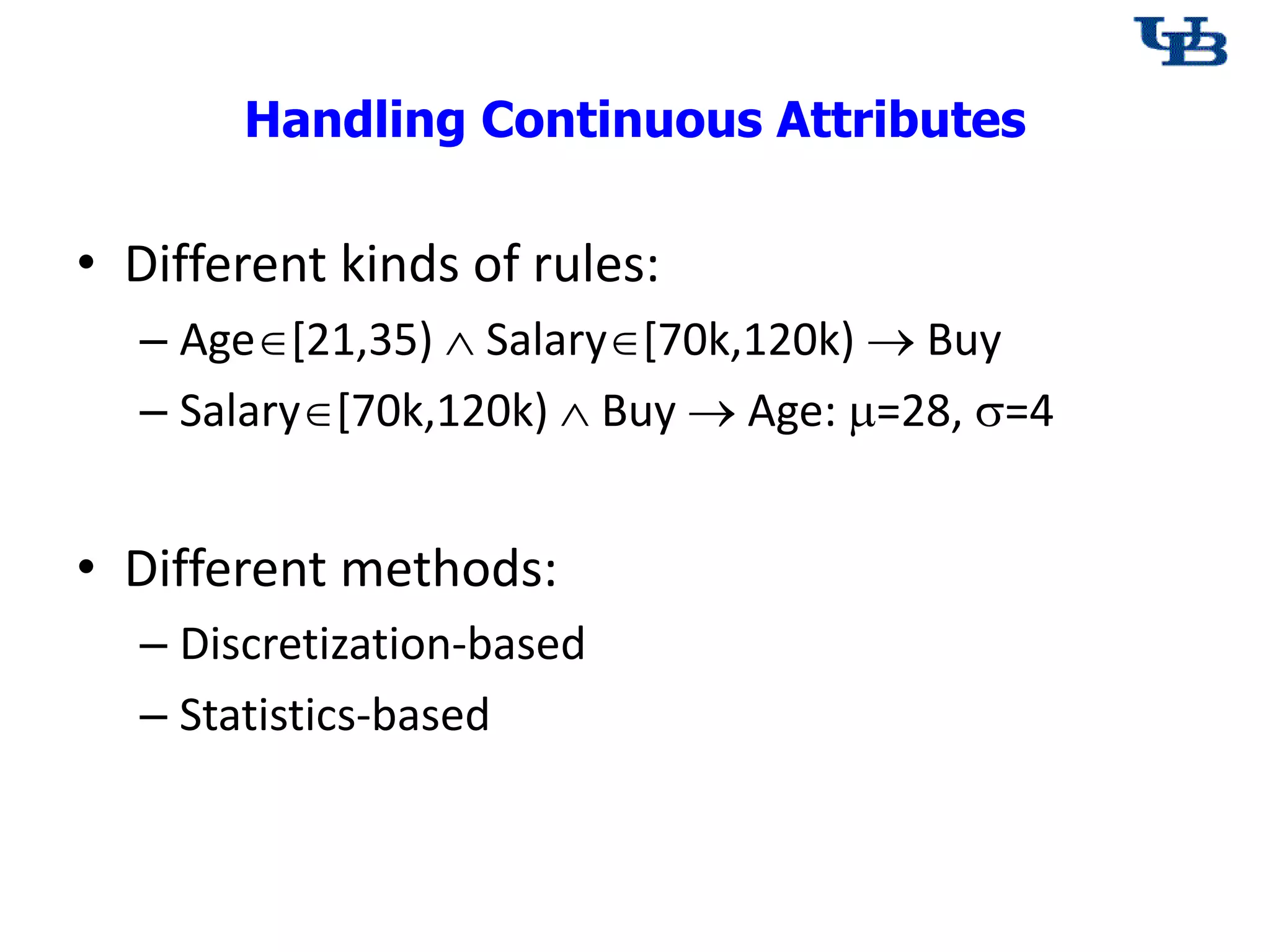

This document covers association rule mining, which finds rules that predict the occurrence of an item from the presence of other items in the same transaction. It defines the key concepts of frequent itemsets, support, confidence, and association rules. It describes the Apriori algorithm, which mines frequent itemsets efficiently by exploiting the anti-monotone property of support: an itemset's support never exceeds the support of any of its subsets. It also discusses the challenges of applying association rule mining to categorical and continuous attributes in record data.
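
To make these definitions concrete, here is a minimal Python sketch of support, confidence, and a level-wise Apriori search. It is an illustration under stated assumptions, not the document's own implementation: the function names (`support`, `confidence`, `apriori`), the toy market-basket transactions, and the 0.5 support threshold are all chosen here for demonstration.

```python
from itertools import combinations

def support(itemset, transactions):
    """Fraction of transactions that contain every item in `itemset`."""
    itemset = frozenset(itemset)
    return sum(itemset <= t for t in transactions) / len(transactions)

def confidence(antecedent, consequent, transactions):
    """support(antecedent | consequent) / support(antecedent)."""
    a = frozenset(antecedent)
    both = a | frozenset(consequent)
    return support(both, transactions) / support(a, transactions)

def apriori(transactions, min_support):
    """Return {itemset: support} for every itemset with support >= min_support."""
    transactions = [frozenset(t) for t in transactions]
    # Level 1 candidates: every single item seen in the data.
    candidates = {frozenset([i]) for t in transactions for i in t}
    frequent = {}
    k = 1
    while candidates:
        # Count support and keep only the frequent candidates at this level.
        level = {}
        for c in candidates:
            s = support(c, transactions)
            if s >= min_support:
                level[c] = s
        frequent.update(level)
        k += 1
        # Join step: merge frequent (k-1)-itemsets into k-itemsets.
        joined = {a | b for a in level for b in level if len(a | b) == k}
        # Prune step (anti-monotone property): a k-itemset can only be
        # frequent if all of its (k-1)-subsets are frequent.
        candidates = {
            c for c in joined
            if all(frozenset(s) in level for s in combinations(c, k - 1))
        }
    return frequent

# Hypothetical toy data, chosen only for illustration.
TRANSACTIONS = [
    frozenset({"Bread", "Milk"}),
    frozenset({"Bread", "Diapers", "Beer", "Eggs"}),
    frozenset({"Milk", "Diapers", "Beer", "Cola"}),
    frozenset({"Bread", "Milk", "Diapers", "Beer"}),
    frozenset({"Bread", "Milk", "Diapers", "Cola"}),
]

FREQUENT = apriori(TRANSACTIONS, min_support=0.5)
RULE_CONF = confidence({"Milk", "Diapers"}, {"Beer"}, TRANSACTIONS)
```

On this toy data the rule {Milk, Diapers} → {Beer} has support 2/5 and confidence 2/3, since two of the three transactions containing both Milk and Diapers also contain Beer. The prune step discards {Bread, Diapers, Beer} and {Milk, Diapers, Beer} without counting them, because each contains an infrequent pair.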