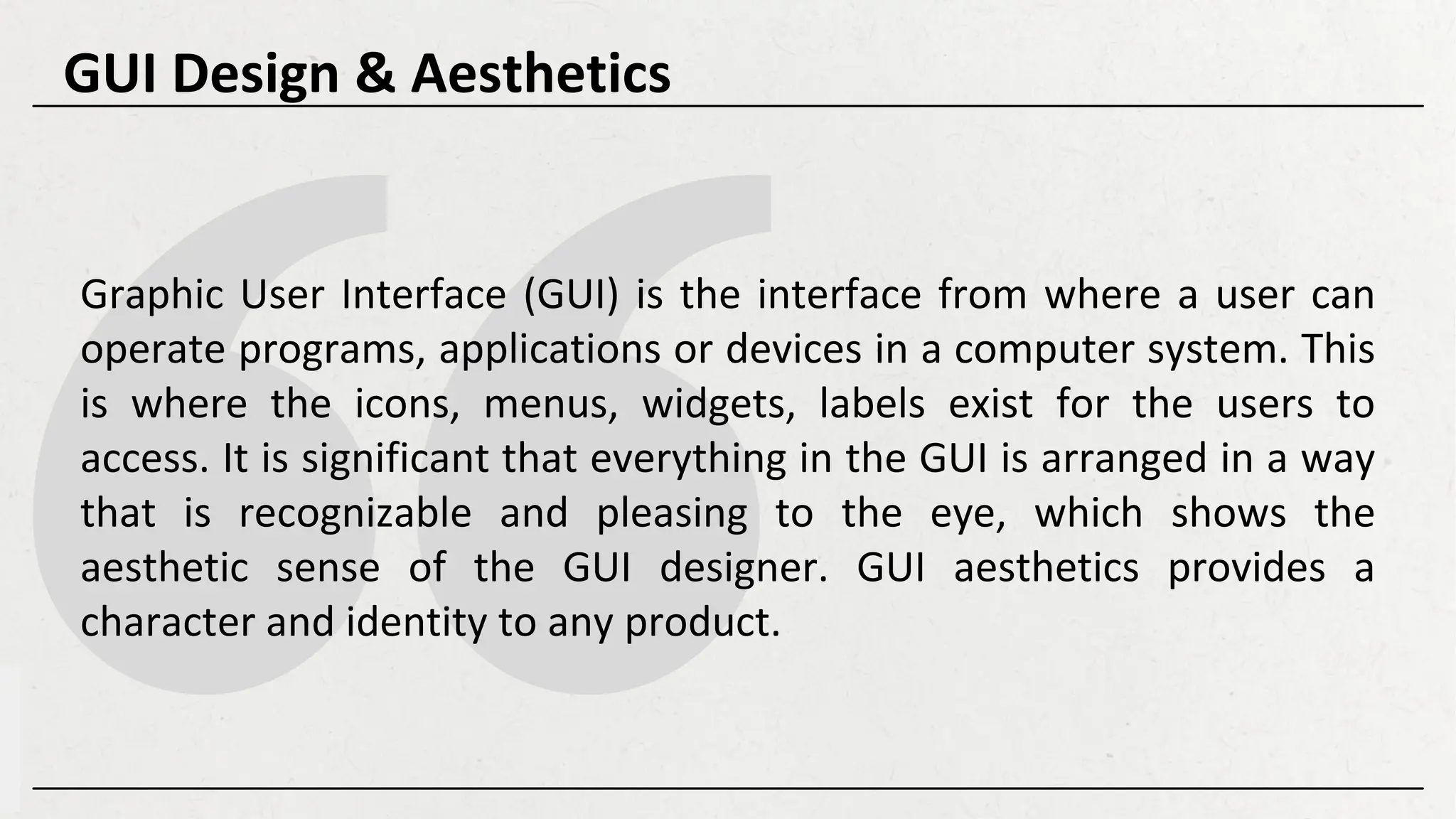

The Midterm phase of the HCI course focuses on design principles, interface development, and user experience strategies that enable students to apply HCI concepts in real-world digital environments. These lessons delve deeper into interaction design, emphasizing how to build intuitive, functional, and aesthetically pleasing interfaces.

Students learn to analyze user needs, create meaningful personas, and design interactive systems that prioritize usability. This stage also introduces usability evaluation techniques and heuristic analysis, equipping students with tools to critically assess interface effectiveness.

![The Invisible Computer by Don Norman

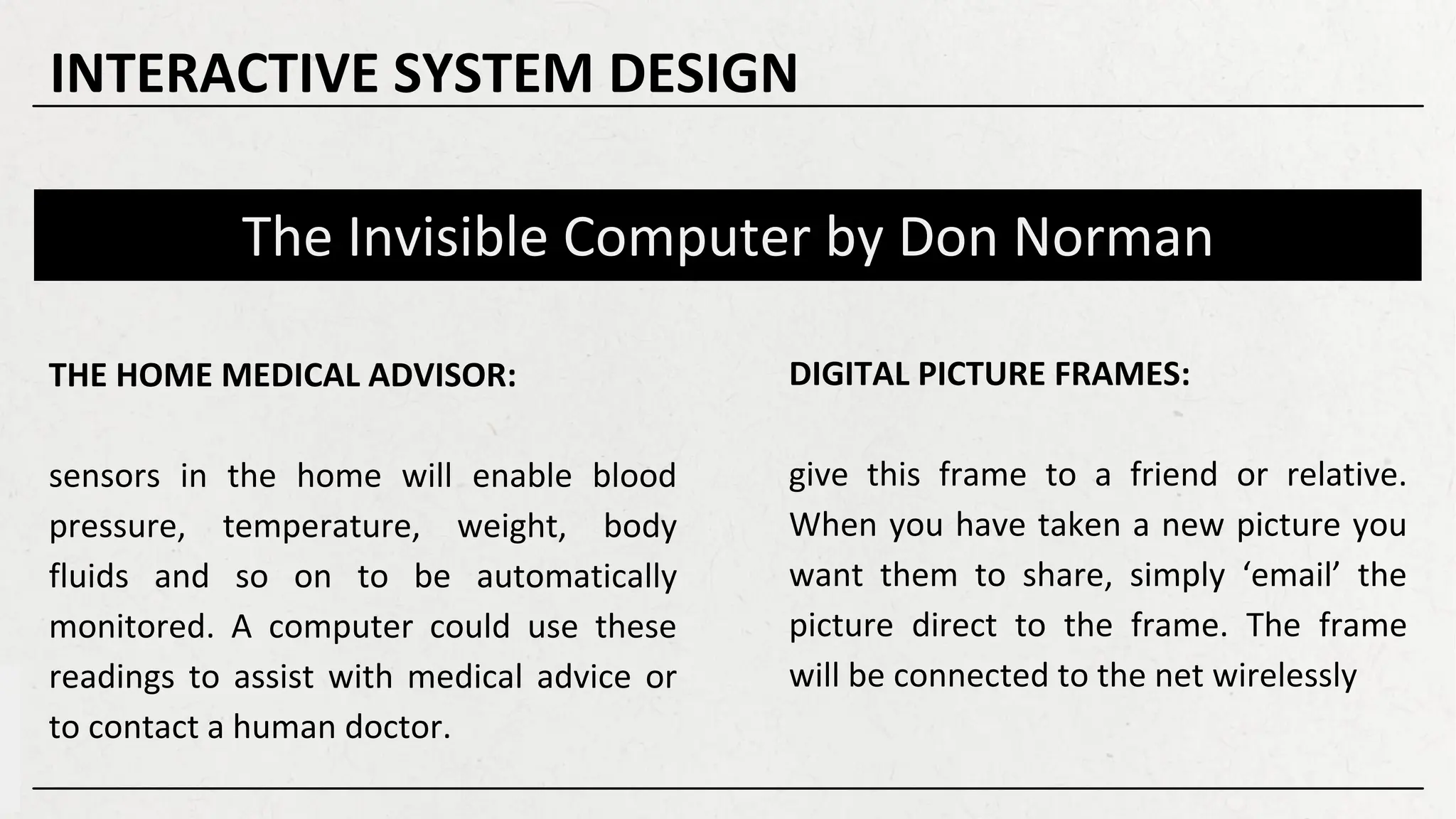

EMBEDDED SYSTEMS WITHIN OUR CLOTHES:

‘consider the value of eyeglass appliances. Many

of us already wear eye glasses … why not

supplant them with more power? Add a small

electronic display to the glasses … and we could

have all sorts of valuable information with us at

all times’ [Norman 99, pg 271-272]

THE WEATHER AND TRAFFIC DISPLAY:

at the moment, when we want the time

we simply look at a clock. Soon, perhaps,

when we want to know the weather or

traffic conditions we will look at a similar

device.

INTERACTIVE SYSTEM DESIGN](https://image.slidesharecdn.com/hci-midtermlessons-250830051149-dba2b8a7/75/Human-Computer-Interaction-Miterm-Lesson-9-2048.jpg)

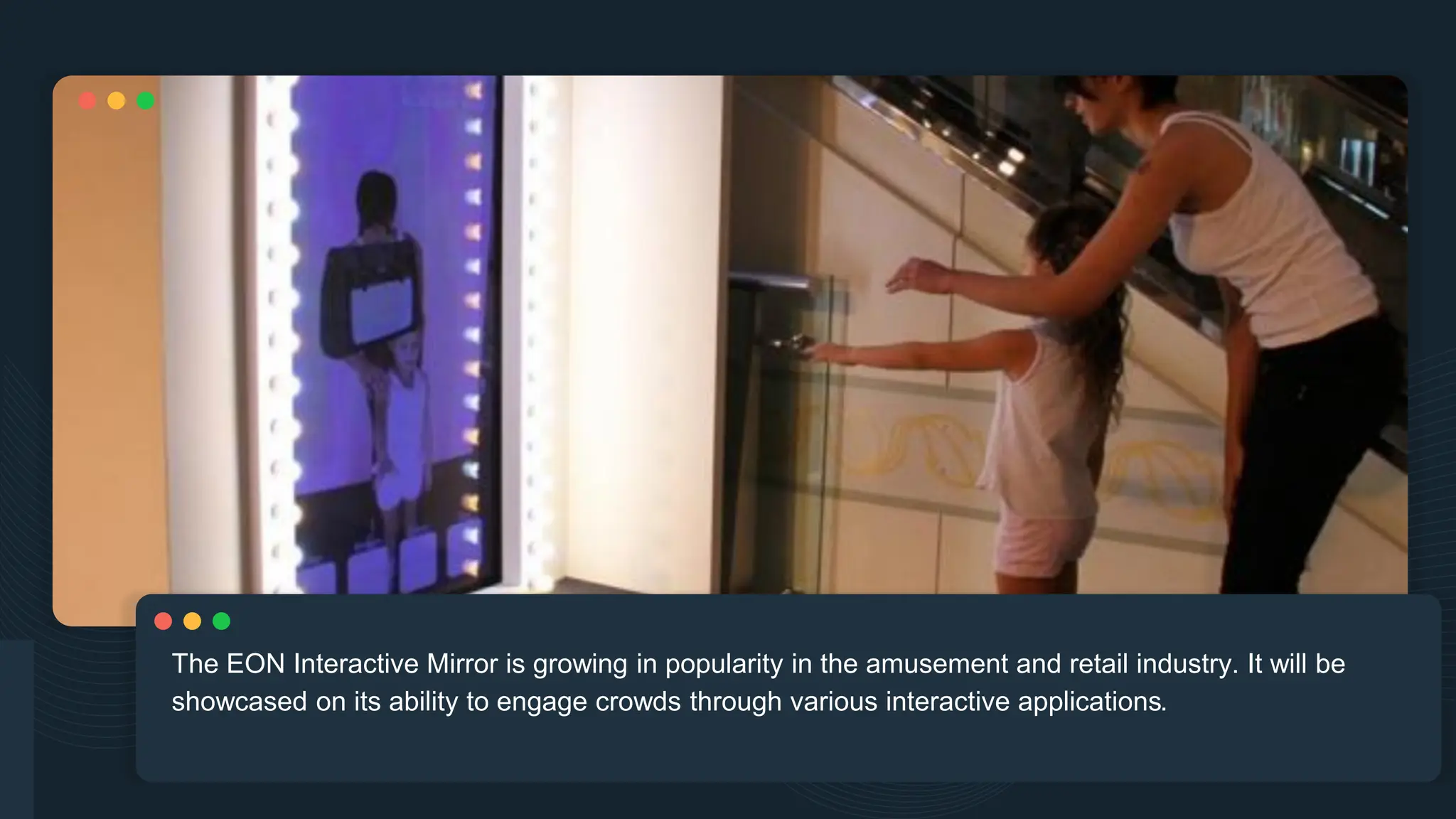

![Response Time](https://image.slidesharecdn.com/hci-midtermlessons-250830051149-dba2b8a7/75/Human-Computer-Interaction-Miterm-Lesson-67-2048.jpg)

Response Time

A device's response time matters to its users, which is why modern devices use advanced processors to keep it short. According to Jakob Nielsen, the basic advice regarding response times has remained about the same for thirty years [Miller 1968; Card et al. 1991].
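The limits Nielsen cites are usually stated as: about 0.1 second for the system to feel instantaneous, about 1 second for the user's flow of thought to stay uninterrupted, and about 10 seconds for keeping the user's attention on the dialogue. The minimal sketch below encodes those thresholds so a measured delay can be mapped to a feedback band. The function name, the band labels, the inclusive boundaries, and the kind of feedback suggested for each band are illustrative assumptions, not part of Nielsen's guidance.

```python
# Nielsen's three classic response-time limits, in seconds.
INSTANT_LIMIT = 0.1     # system feels like it reacts instantaneously
FLOW_LIMIT = 1.0        # user's flow of thought stays uninterrupted
ATTENTION_LIMIT = 10.0  # user's attention stays focused on the dialogue


def classify_response_time(seconds: float) -> str:
    """Map a measured delay to a feedback band.

    Hypothetical helper: the labels and the feedback noted in the comments
    below are illustrative assumptions, not Nielsen's wording.
    """
    if seconds < 0:
        raise ValueError("response time cannot be negative")
    if seconds <= INSTANT_LIMIT:
        return "instant"        # just show the result
    if seconds <= FLOW_LIMIT:
        return "noticeable"     # delay is felt, but the user's flow is kept
    if seconds <= ATTENTION_LIMIT:
        return "show-busy"      # assumed: a busy cursor or spinner
    return "show-progress"      # assumed: a progress indicator and a way to cancel


if __name__ == "__main__":
    for delay in (0.05, 0.5, 3.0, 12.0):
        print(delay, classify_response_time(delay))
```

Keeping the thresholds as named constants makes it easy to adjust them if a course or style guide states the limits differently.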

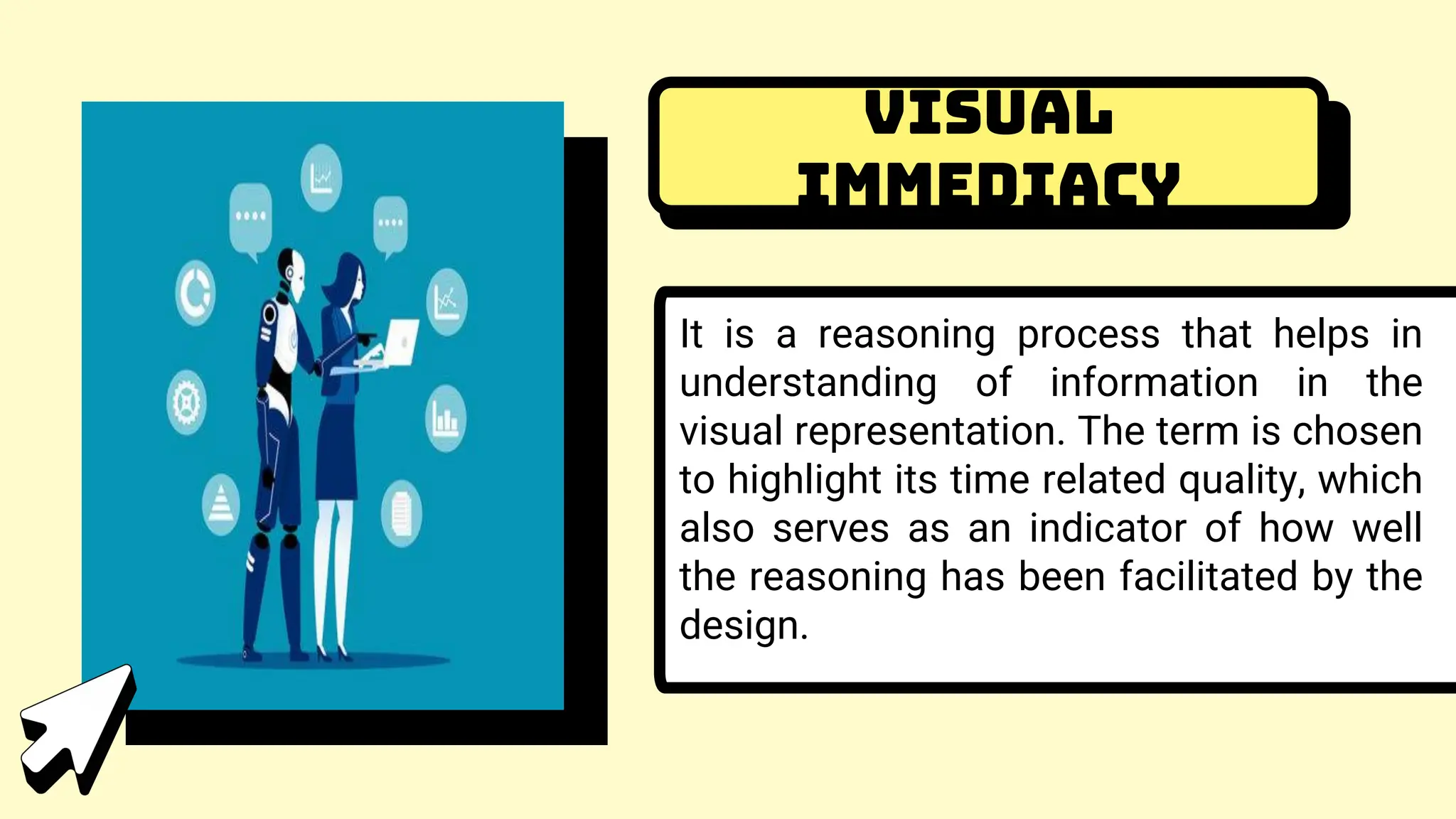

![Petri Nets](https://image.slidesharecdn.com/hci-midtermlessons-250830051149-dba2b8a7/75/Human-Computer-Interaction-Miterm-Lesson-112-2048.jpg)

Petri Nets

Petri Nets were originally developed by Carl Adam Petri [Pet62] and were the subject of his dissertation in 1962. Since then, Petri Nets and their concepts have been extended, developed, and applied in a variety of areas: office automation, workflows, flexible manufacturing, programming languages, protocols and networks, hardware structures, real-time systems, performance evaluation, operations research, embedded systems, defense systems, telecommunications, the Internet, e-commerce and trading, railway networks, and biological systems. The slide shows an example Petri Net model for the control of a metabolic pathway, created with the tool Visual Object Net++.
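A Petri Net consists of places that hold tokens and transitions connected to them by weighted arcs. A transition is enabled when each of its input places holds at least as many tokens as the arc weight requires; firing it removes those tokens and adds tokens to its output places. The minimal Python sketch below implements that firing rule for a plain place/transition net. The class, its methods, and the toy "react" transition are hypothetical illustrations; they are not the metabolic-pathway model on the slide or anything from Visual Object Net++.

```python
from dataclasses import dataclass, field


@dataclass
class PetriNet:
    """Minimal place/transition net (hypothetical sketch)."""
    # Current marking: number of tokens in each place.
    marking: dict[str, int]
    # Each transition maps to (input arcs, output arcs); arc weight = tokens moved.
    transitions: dict[str, tuple[dict[str, int], dict[str, int]]] = field(default_factory=dict)

    def enabled(self, name: str) -> bool:
        """True if every input place holds at least the arc weight in tokens."""
        inputs, _ = self.transitions[name]
        return all(self.marking.get(place, 0) >= w for place, w in inputs.items())

    def fire(self, name: str) -> None:
        """Fire an enabled transition: consume input tokens, then produce output tokens."""
        if not self.enabled(name):
            raise ValueError(f"transition {name!r} is not enabled")
        inputs, outputs = self.transitions[name]
        for place, w in inputs.items():
            self.marking[place] -= w
        for place, w in outputs.items():
            self.marking[place] = self.marking.get(place, 0) + w


# Hypothetical toy net: A -> B needs an "enzyme" token, which is consumed and
# immediately returned, so it acts as a reusable resource.
net = PetriNet(
    marking={"A": 2, "enzyme": 1, "B": 0},
    transitions={"react": ({"A": 1, "enzyme": 1}, {"B": 1, "enzyme": 1})},
)
net.fire("react")
net.fire("react")
# The marking is now {"A": 0, "enzyme": 1, "B": 2}, and "react" is no longer enabled.
```

Representing the marking as a dictionary keeps the sketch short; analysis tools typically use a vector of token counts with an incidence matrix instead, which makes reachability and invariant analysis easier.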