---

title: Overview · Cloudflare R2 docs

description: Cloudflare R2 is a cost-effective, scalable object storage solution

for cloud-native apps, web content, and data lakes without egress fees.

lastUpdated: 2025-08-18T14:27:42.000Z

chatbotDeprioritize: false

source_url:

html: https://developers.cloudflare.com/r2/

md: https://developers.cloudflare.com/r2/index.md

---

Object storage for all your data.

Cloudflare R2 Storage allows developers to store large amounts of unstructured data without the costly egress bandwidth fees associated with typical cloud storage services.

You can use R2 for multiple scenarios, including but not limited to:

* Storage for cloud-native applications

* Cloud storage for web content

* Storage for podcast episodes

* Data lakes (analytics and big data)

* Cloud storage output for large batch processes, such as machine learning model artifacts or datasets

[Get started](https://developers.cloudflare.com/r2/get-started/)

[Browse the examples](https://developers.cloudflare.com/r2/examples/)

***

## Features

### Location Hints

Location Hints are optional parameters you can provide during bucket creation to indicate the primary geographical location you expect data will be accessed from.

[Use Location Hints](https://developers.cloudflare.com/r2/reference/data-location/#location-hints)

### CORS

Configure CORS to interact with objects in your bucket and configure policies on your bucket.

[Use CORS](https://developers.cloudflare.com/r2/buckets/cors/)

### Public buckets

Public buckets expose the contents of your R2 bucket directly to the Internet.

[Use Public buckets](https://developers.cloudflare.com/r2/buckets/public-buckets/)

### Bucket scoped tokens

Create bucket scoped tokens for granular control over who can access your data.

[Use Bucket scoped tokens](https://developers.cloudflare.com/r2/api/tokens/)

***

## Related products

**[Workers](https://developers.cloudflare.com/workers/)**

A [serverless](https://www.cloudflare.com/learning/serverless/what-is-serverless/) execution environment that allows you to create entirely new applications or augment existing ones without configuring or maintaining infrastructure.

**[Stream](https://developers.cloudflare.com/stream/)**

Upload, store, encode, and deliver live and on-demand video with one API, without configuring or maintaining infrastructure.

**[Images](https://developers.cloudflare.com/images/)**

A suite of products tailored to your image-processing needs.

***

## More resources

[Pricing](https://developers.cloudflare.com/r2/pricing)

Understand pricing for free and paid tier rates.

[Discord](https://discord.cloudflare.com)

Ask questions, show off what you are building, and discuss the platform with other developers.

[Twitter](https://x.com/cloudflaredev)

Learn about product announcements, new tutorials, and what is new in Cloudflare Workers.

---

title: 404 - Page Not Found · Cloudflare R2 docs

chatbotDeprioritize: false

source_url:

html: https://developers.cloudflare.com/r2/404/

md: https://developers.cloudflare.com/r2/404/index.md

---

# 404

Check the URL, try using our [search](https://developers.cloudflare.com/search/) or try our LLM-friendly [llms.txt directory](https://developers.cloudflare.com/llms.txt).

---

title: API · Cloudflare R2 docs

lastUpdated: 2024-08-30T16:09:27.000Z

chatbotDeprioritize: true

source_url:

html: https://developers.cloudflare.com/r2/api/

md: https://developers.cloudflare.com/r2/api/index.md

---

* [Authentication](https://developers.cloudflare.com/r2/api/tokens/)

* [Workers API](https://developers.cloudflare.com/r2/api/workers/)

* [S3](https://developers.cloudflare.com/r2/api/s3/)

* [Error codes](https://developers.cloudflare.com/r2/api/error-codes/)

---

title: R2 Data Catalog · Cloudflare R2 docs

description: A managed Apache Iceberg data catalog built directly into R2 buckets.

lastUpdated: 2026-02-02T10:17:46.000Z

chatbotDeprioritize: false

source_url:

html: https://developers.cloudflare.com/r2/data-catalog/

md: https://developers.cloudflare.com/r2/data-catalog/index.md

---

Note

R2 Data Catalog is in **public beta**, and any developer with an [R2 subscription](https://developers.cloudflare.com/r2/pricing/) can start using it. Currently, outside of standard R2 storage and operations, you will not be billed for your use of R2 Data Catalog.

R2 Data Catalog is a managed [Apache Iceberg](https://iceberg.apache.org/) data catalog built directly into your R2 bucket. It exposes a standard Iceberg REST catalog interface, so you can connect the engines you already use, like [Spark](https://developers.cloudflare.com/r2/data-catalog/config-examples/spark-scala/), [Snowflake](https://developers.cloudflare.com/r2/data-catalog/config-examples/snowflake/), and [PyIceberg](https://developers.cloudflare.com/r2/data-catalog/config-examples/pyiceberg/).

R2 Data Catalog makes it easy to turn an R2 bucket into a data warehouse or lakehouse for a variety of analytical workloads including log analytics, business intelligence, and data pipelines. R2's zero-egress fee model means that data users and consumers can access and analyze data from different clouds, data platforms, or regions without incurring transfer costs.

To get started with R2 Data Catalog, refer to the [R2 Data Catalog: Getting started](https://developers.cloudflare.com/r2/data-catalog/get-started/).

## What is Apache Iceberg?

[Apache Iceberg](https://iceberg.apache.org/) is an open table format designed to handle large-scale analytics datasets stored in object storage. Key features include:

* ACID transactions - Ensures reliable, concurrent reads and writes with full data integrity.

* Optimized metadata - Avoids costly full table scans by using indexed metadata for faster queries.

* Full schema evolution - Allows adding, renaming, and deleting columns without rewriting data.

Iceberg is already [widely supported](https://iceberg.apache.org/vendors/) by engines like Apache Spark, Trino, Snowflake, DuckDB, and ClickHouse, with a fast-growing community behind it.

## Why do you need a data catalog?

Although the Iceberg data and metadata files themselves live directly in object storage (like [R2](https://developers.cloudflare.com/r2/)), the list of tables and pointers to the current metadata need to be tracked centrally by a data catalog.

Think of a data catalog as a library's index system. While books (your data) are physically distributed across shelves (object storage), the index provides a single source of truth about what books exist, their locations, and their latest editions. Without this index, readers (query engines) would waste time searching for books, might access outdated versions, or could accidentally shelve new books in ways that make them unfindable.

Similarly, data catalogs ensure consistent, coordinated access, which allows multiple query engines to safely read from and write to the same tables without conflicts or data corruption.

## Learn more

[Get started ](https://developers.cloudflare.com/r2/data-catalog/get-started/)Learn how to enable the R2 Data Catalog on your bucket, load sample data, and run your first query.

[Managing catalogs ](https://developers.cloudflare.com/r2/data-catalog/manage-catalogs/)Enable or disable R2 Data Catalog on your bucket, retrieve configuration details, and authenticate your Iceberg engine.

[Connect to Iceberg engines ](https://developers.cloudflare.com/r2/data-catalog/config-examples/)Find detailed setup instructions for Apache Spark and other common query engines.

---

title: Data migration · Cloudflare R2 docs

description: Quickly and easily migrate data from other cloud providers to R2.

Explore each option further by navigating to their respective documentation

page.

lastUpdated: 2025-05-15T13:16:23.000Z

chatbotDeprioritize: false

source_url:

html: https://developers.cloudflare.com/r2/data-migration/

md: https://developers.cloudflare.com/r2/data-migration/index.md

---

Quickly and easily migrate data from other cloud providers to R2. Explore each option further by navigating to their respective documentation page.

| Name | Description | When to use |

| - | - | - |

| [Super Slurper](https://developers.cloudflare.com/r2/data-migration/super-slurper/) | Quickly migrate large amounts of data from other cloud providers to R2. | * For one-time, comprehensive transfers. |

| [Sippy](https://developers.cloudflare.com/r2/data-migration/sippy/) | Incremental data migration, populating your R2 bucket as objects are requested. | - For gradual migration that avoids upfront egress fees.

- To start serving frequently accessed objects from R2 without a full migration. |

For information on how to leverage these tools effectively, refer to [Migration Strategies](https://developers.cloudflare.com/r2/data-migration/migration-strategies/)

---

title: Buckets · Cloudflare R2 docs

description: With object storage, all of your objects are stored in buckets.

Buckets do not contain folders that group the individual files, but instead,

buckets have a flat structure which simplifies the way you access and retrieve

the objects in your bucket.

lastUpdated: 2024-08-13T19:56:56.000Z

chatbotDeprioritize: false

source_url:

html: https://developers.cloudflare.com/r2/buckets/

md: https://developers.cloudflare.com/r2/buckets/index.md

---

With object storage, all of your objects are stored in buckets. Buckets do not contain folders that group the individual files, but instead, buckets have a flat structure which simplifies the way you access and retrieve the objects in your bucket.

Learn more about bucket level operations from the items below.

* [Bucket locks](https://developers.cloudflare.com/r2/buckets/bucket-locks/)

* [Create new buckets](https://developers.cloudflare.com/r2/buckets/create-buckets/)

* [Configure CORS](https://developers.cloudflare.com/r2/buckets/cors/)

* [Event notifications](https://developers.cloudflare.com/r2/buckets/event-notifications/)

* [Local uploads](https://developers.cloudflare.com/r2/buckets/local-uploads/)

* [Object lifecycles](https://developers.cloudflare.com/r2/buckets/object-lifecycles/)

* [Public buckets](https://developers.cloudflare.com/r2/buckets/public-buckets/)

* [Storage classes](https://developers.cloudflare.com/r2/buckets/storage-classes/)

---

title: Demos and architectures · Cloudflare R2 docs

description: Explore Cloudflare R2 demos and reference architectures for

fullstack applications, storage, and AI, with examples and use cases.

lastUpdated: 2025-10-30T16:19:51.000Z

chatbotDeprioritize: false

source_url:

html: https://developers.cloudflare.com/r2/demos/

md: https://developers.cloudflare.com/r2/demos/index.md

---

Learn how you can use R2 within your existing application and architecture.

## Demos

Explore the following demo applications for R2.

* [Jobs At Conf:](https://github.com/harshil1712/jobs-at-conf-demo) A job lisiting website to add jobs you find at in-person conferences. Built with Cloudflare Pages, R2, D1, Queues, and Workers AI.

* [Upload Image to R2 starter:](https://github.com/harshil1712/nextjs-r2-demo) Upload images to Cloudflare R2 from a Next.js application.

* [DMARC Email Worker:](https://github.com/cloudflare/dmarc-email-worker) A Cloudflare worker script to process incoming DMARC reports, store them, and produce analytics.

## Reference architectures

Explore the following reference architectures that use R2:

[Fullstack applications](https://developers.cloudflare.com/reference-architecture/diagrams/serverless/fullstack-application/)

[A practical example of how these services come together in a real fullstack application architecture.](https://developers.cloudflare.com/reference-architecture/diagrams/serverless/fullstack-application/)

[Storing user generated content](https://developers.cloudflare.com/reference-architecture/diagrams/storage/storing-user-generated-content/)

[Store user-generated content in R2 for fast, secure, and cost-effective architecture.](https://developers.cloudflare.com/reference-architecture/diagrams/storage/storing-user-generated-content/)

[Optimizing and securing connected transportation systems](https://developers.cloudflare.com/reference-architecture/diagrams/iot/optimizing-and-securing-connected-transportation-systems/)

[This diagram showcases Cloudflare components optimizing connected transportation systems. It illustrates how their technologies minimize latency, ensure reliability, and strengthen security for critical data flow.](https://developers.cloudflare.com/reference-architecture/diagrams/iot/optimizing-and-securing-connected-transportation-systems/)

[Ingesting BigQuery Data into Workers AI](https://developers.cloudflare.com/reference-architecture/diagrams/ai/bigquery-workers-ai/)

[You can connect a Cloudflare Worker to get data from Google BigQuery and pass it to Workers AI, to run AI Models, powered by serverless GPUs.](https://developers.cloudflare.com/reference-architecture/diagrams/ai/bigquery-workers-ai/)

[Event notifications for storage](https://developers.cloudflare.com/reference-architecture/diagrams/storage/event-notifications-for-storage/)

[Use Cloudflare Workers or an external service to monitor for notifications about data changes and then handle them appropriately.](https://developers.cloudflare.com/reference-architecture/diagrams/storage/event-notifications-for-storage/)

[On-demand Object Storage Data Migration](https://developers.cloudflare.com/reference-architecture/diagrams/storage/on-demand-object-storage-migration/)

[Use Cloudflare migration tools to migrate data between cloud object storage providers.](https://developers.cloudflare.com/reference-architecture/diagrams/storage/on-demand-object-storage-migration/)

[Optimizing image delivery with Cloudflare image resizing and R2](https://developers.cloudflare.com/reference-architecture/diagrams/content-delivery/optimizing-image-delivery-with-cloudflare-image-resizing-and-r2/)

[Learn how to get a scalable, high-performance solution to optimizing image delivery.](https://developers.cloudflare.com/reference-architecture/diagrams/content-delivery/optimizing-image-delivery-with-cloudflare-image-resizing-and-r2/)

[Composable AI architecture](https://developers.cloudflare.com/reference-architecture/diagrams/ai/ai-composable/)

[The architecture diagram illustrates how AI applications can be built end-to-end on Cloudflare, or single services can be integrated with external infrastructure and services.](https://developers.cloudflare.com/reference-architecture/diagrams/ai/ai-composable/)

[Serverless ETL pipelines](https://developers.cloudflare.com/reference-architecture/diagrams/serverless/serverless-etl/)

[Cloudflare enables fully serverless ETL pipelines, significantly reducing complexity, accelerating time to production, and lowering overall costs.](https://developers.cloudflare.com/reference-architecture/diagrams/serverless/serverless-etl/)

[Egress-free object storage in multi-cloud setups](https://developers.cloudflare.com/reference-architecture/diagrams/storage/egress-free-storage-multi-cloud/)

[Learn how to use R2 to get egress-free object storage in multi-cloud setups.](https://developers.cloudflare.com/reference-architecture/diagrams/storage/egress-free-storage-multi-cloud/)

[Automatic captioning for video uploads](https://developers.cloudflare.com/reference-architecture/diagrams/ai/ai-video-caption/)

[By integrating automatic speech recognition technology into video platforms, content creators, publishers, and distributors can reach a broader audience, including individuals with hearing impairments or those who prefer to consume content in different languages.](https://developers.cloudflare.com/reference-architecture/diagrams/ai/ai-video-caption/)

[Serverless image content management](https://developers.cloudflare.com/reference-architecture/diagrams/serverless/serverless-image-content-management/)

[Leverage various components of Cloudflare's ecosystem to construct a scalable image management solution](https://developers.cloudflare.com/reference-architecture/diagrams/serverless/serverless-image-content-management/)

---

title: Examples · Cloudflare R2 docs

description: Explore the following examples of how to use SDKs and other tools with R2.

lastUpdated: 2025-08-18T14:27:42.000Z

chatbotDeprioritize: false

source_url:

html: https://developers.cloudflare.com/r2/examples/

md: https://developers.cloudflare.com/r2/examples/index.md

---

Explore the following examples of how to use SDKs and other tools with R2.

* [Authenticate against R2 API using auth tokens](https://developers.cloudflare.com/r2/examples/authenticate-r2-auth-tokens/)

* [Use the Cache API](https://developers.cloudflare.com/r2/examples/cache-api/)

* [Multi-cloud setup](https://developers.cloudflare.com/reference-architecture/diagrams/storage/egress-free-storage-multi-cloud/)

* [Rclone](https://developers.cloudflare.com/r2/examples/rclone/)

* [S3 SDKs](https://developers.cloudflare.com/r2/examples/aws/)

* [Terraform](https://developers.cloudflare.com/r2/examples/terraform/)

* [Terraform (AWS)](https://developers.cloudflare.com/r2/examples/terraform-aws/)

* [Use SSE-C](https://developers.cloudflare.com/r2/examples/ssec/)

---

title: Get started · Cloudflare R2 docs

description: Create your first R2 bucket and store objects using the dashboard,

S3-compatible tools, or Workers.

lastUpdated: 2026-01-26T20:24:24.000Z

chatbotDeprioritize: false

source_url:

html: https://developers.cloudflare.com/r2/get-started/

md: https://developers.cloudflare.com/r2/get-started/index.md

---

Cloudflare R2 Storage allows developers to store large amounts of unstructured data without the costly egress bandwidth fees associated with typical cloud storage services.

## Before you begin

You need a Cloudflare account with an R2 subscription. If you do not have one:

1. Go to the [Cloudflare Dashboard](https://dash.cloudflare.com/).

2. Select **Storage & databases > R2 > Overview**

3. Complete the checkout flow to add an R2 subscription to your account.

R2 is free to get started with included free monthly usage. You are billed for your usage on a monthly basis. Refer to [Pricing](https://developers.cloudflare.com/r2/pricing/) for details.

[Go to **Overview**](https://dash.cloudflare.com/?to=/:account/r2/overview)

## Choose how to access R2

R2 supports multiple access methods, so you can choose the one that fits your use case best:

| Method | Use when |

| - | - |

| [Workers API](https://developers.cloudflare.com/r2/get-started/workers-api/) | You are building an application on Cloudflare Workers that needs to read or write from R2 |

| [S3](https://developers.cloudflare.com/r2/get-started/s3/) | You want to use S3-compatible SDKs to interact with R2 in your existing applications |

| [CLI tools](https://developers.cloudflare.com/r2/get-started/cli/) | You want to upload, download, or manage objects from your terminal |

| [Dashboard](https://dash.cloudflare.com/?to=/:account/r2/overview) | You want to quickly view and manage buckets and objects in the browser |

## Next steps

[Workers API ](https://developers.cloudflare.com/r2/get-started/workers-api/)Use R2 from Cloudflare Workers.

[S3 ](https://developers.cloudflare.com/r2/get-started/s3/)Use R2 with S3-compatible SDKs.

[CLI ](https://developers.cloudflare.com/r2/get-started/cli/)Use R2 from the command line.

---

title: How R2 works · Cloudflare R2 docs

description: Find out how R2 works.

lastUpdated: 2026-02-03T04:13:50.000Z

chatbotDeprioritize: false

source_url:

html: https://developers.cloudflare.com/r2/how-r2-works/

md: https://developers.cloudflare.com/r2/how-r2-works/index.md

---

Cloudflare R2 is an S3-compatible object storage service with no egress fees, built on Cloudflare's global network. It is [strongly consistent](https://developers.cloudflare.com/r2/reference/consistency/) and designed for high [data durability](https://developers.cloudflare.com/r2/reference/durability/).

R2 is ideal for storing and serving unstructured data that needs to be accessed frequently over the internet, without incurring egress fees. It's a good fit for workloads like serving web assets, training AI models, and managing user-generated content.

## Architecture

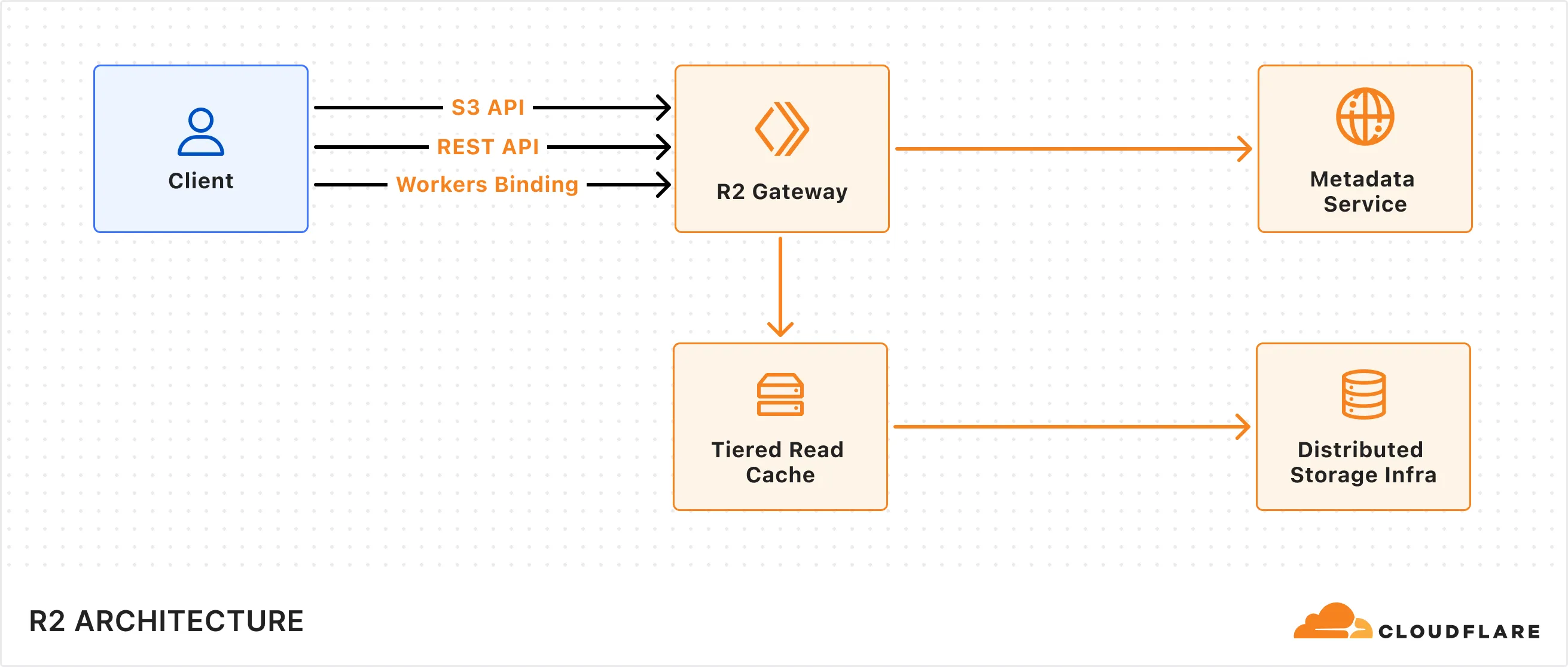

R2's architecture is composed of multiple components:

* **R2 Gateway:** The entry point for all API requests that handles authentication and routing logic. This service is deployed across Cloudflare's global network via [Cloudflare Workers](https://developers.cloudflare.com/workers/).

* **Metadata Service:** A distributed layer built on [Durable Objects](https://developers.cloudflare.com/durable-objects/) used to store and manage object metadata (e.g. object key, checksum) to ensure strong consistency of the object across the storage system. It includes a built-in cache layer to speed up access to metadata.

* **Tiered Read Cache:** A caching layer that sits in front of the Distributed Storage Infrastructure that speeds up object reads by using [Cloudflare Tiered Cache](https://developers.cloudflare.com/cache/how-to/tiered-cache/) to serve data closer to the client.

* **Distributed Storage Infrastructure:** The underlying infrastructure that persistently stores encrypted object data.

R2 supports multiple client interfaces including [Cloudflare Workers Binding](https://developers.cloudflare.com/r2/api/workers/workers-api-usage/), [S3-compatible API](https://developers.cloudflare.com/r2/api/s3/api/), and a [REST API](https://developers.cloudflare.com/api/resources/r2/) that powers the Cloudflare Dashboard and Wrangler CLI. All requests are routed through the R2 Gateway, which coordinates with the Metadata Service and Distributed Storage Infrastructure to retrieve the object data.

## Write data to R2

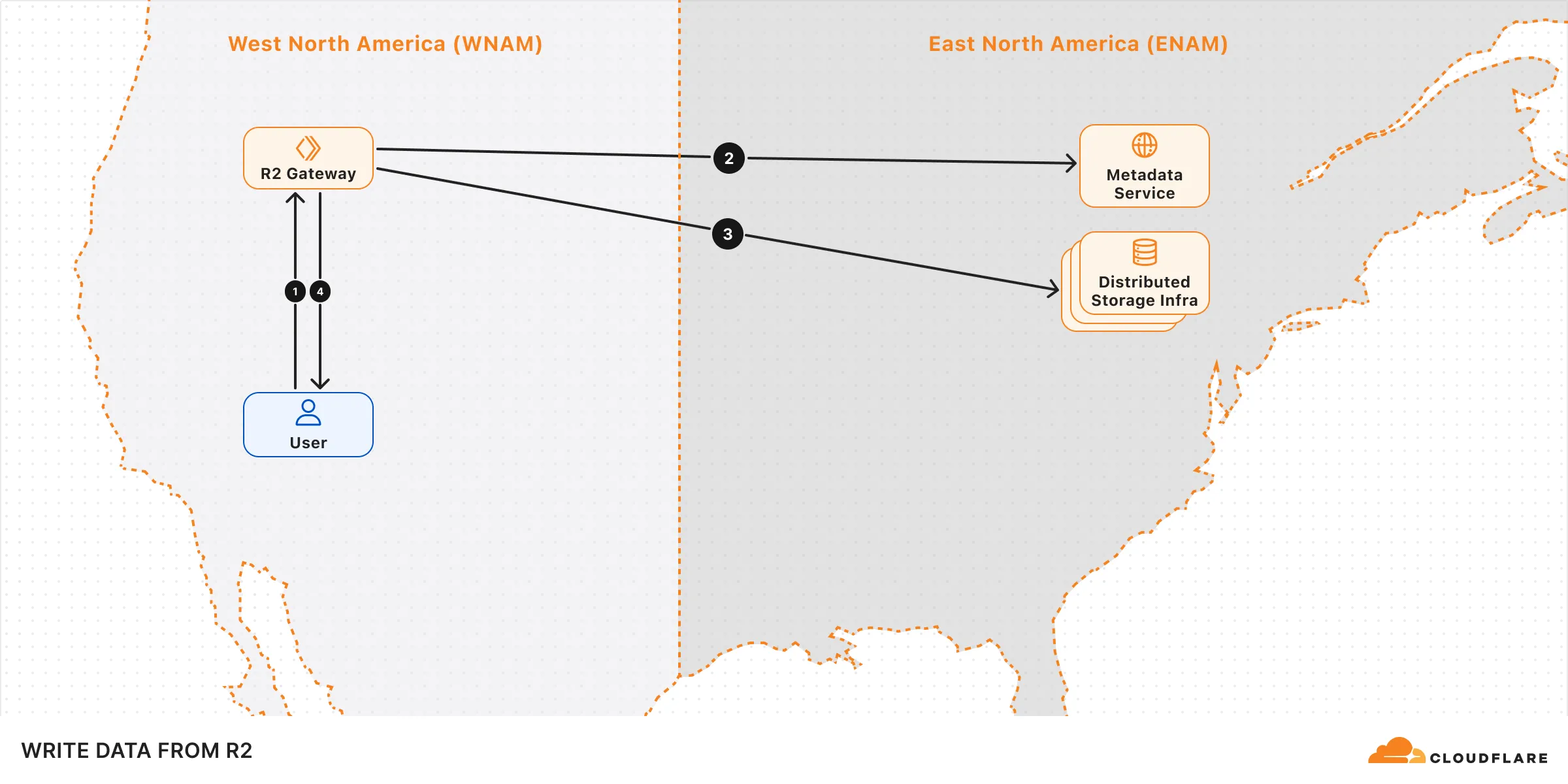

When a write request (e.g. uploading an object) is made to R2, the following sequence occurs:

1. **Request handling:** The request is received by the R2 Gateway at the edge, close to the user, where it is authenticated.

2. **Encryption and routing:** The Gateway reaches out to the Metadata Service to retrieve the [encryption key](https://developers.cloudflare.com/r2/reference/data-security/) and determines which storage cluster to write the encrypted data to within the [location](https://developers.cloudflare.com/r2/reference/data-location/) set for the bucket.

3. **Writing to storage:** The encrypted data is written and stored in the distributed storage infrastructure, and replicated within the region (e.g. ENAM) for [durability](https://developers.cloudflare.com/r2/reference/durability/).

4. **Metadata commit:** Finally, the Metadata Service commits the object's metadata, making it visible in subsequent reads. Only after this commit is an `HTTP 200` success response sent to the client, preventing unacknowledged writes.

## Read data from R2

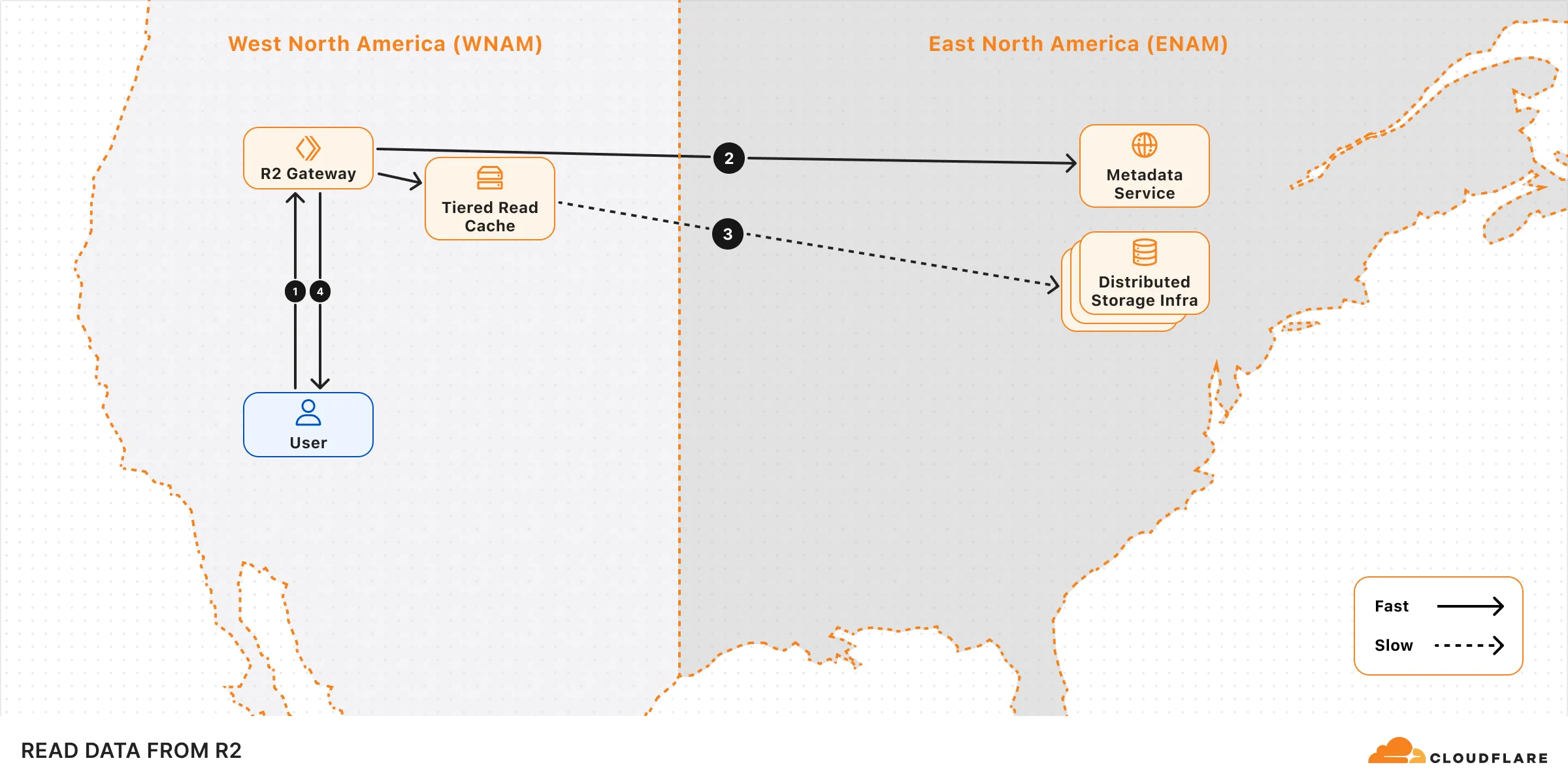

When a read request (e.g. fetching an object) is made to R2, the following sequence occurs:

1. **Request handling:** The request is received by the R2 Gateway at the edge, close to the user, where it is authenticated.

2. **Metadata lookup:** The Gateway asks the Metadata Service for the object metadata.

3. **Reading the object:** The Gateway attempts to retrieve the [encrypted](https://developers.cloudflare.com/r2/reference/data-security/) object from the tiered read cache. If it's not available, it retrieves the object from one of the distributed storage data centers within the region that holds the object data.

4. **Serving to client:** The object is decrypted and served to the user.

## Performance

The performance of your operations can be influenced by factors such as the bucket's geographical location, request origin, and access patterns.

To optimize upload performance for cross-region requests, enable [Local Uploads](https://developers.cloudflare.com/r2/buckets/local-uploads/) on your bucket.

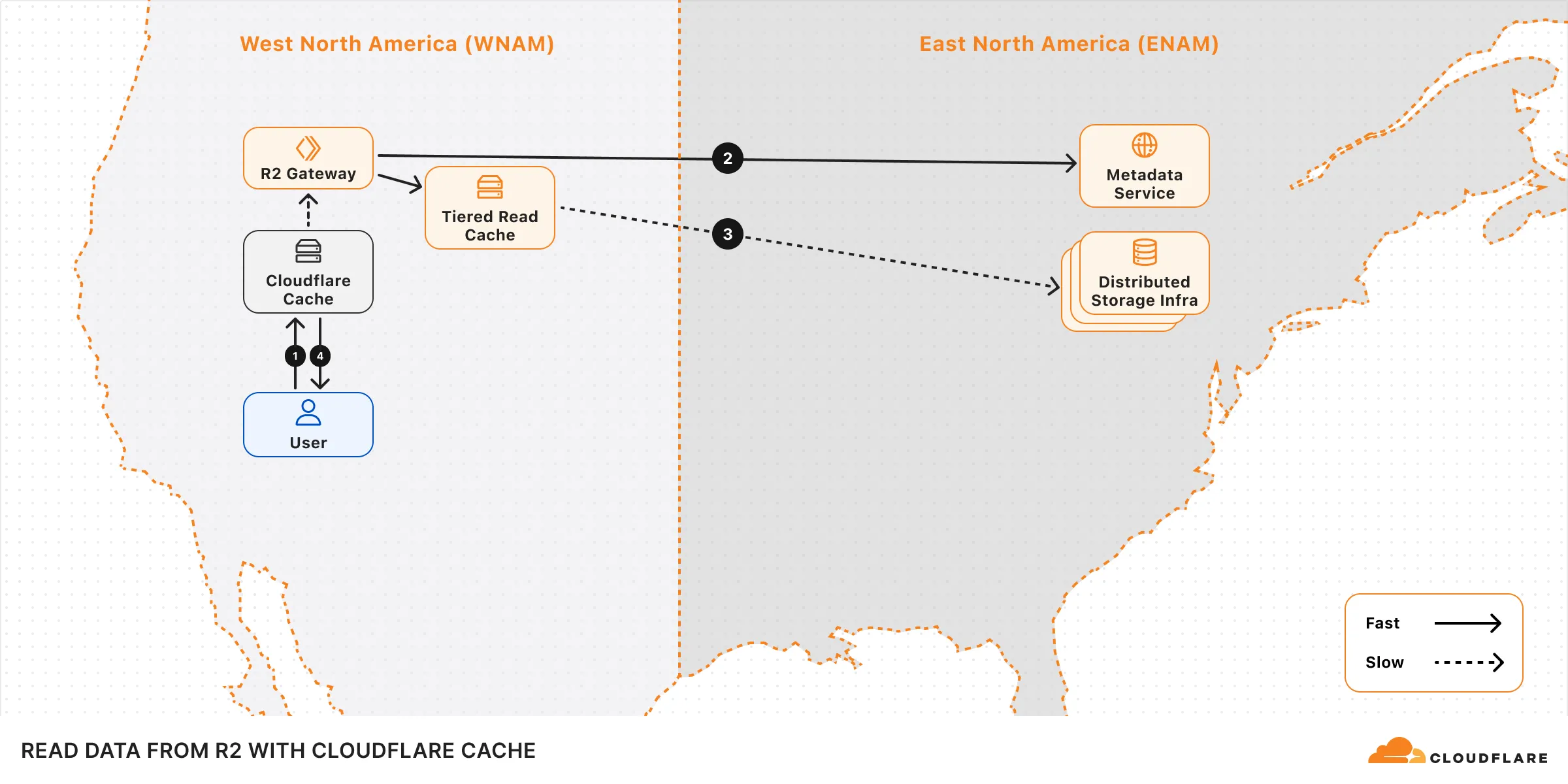

To optimize read performance, enable [Cloudflare Cache](https://developers.cloudflare.com/cache/) when using a [custom domain](https://developers.cloudflare.com/r2/buckets/public-buckets/#custom-domains). When caching is enabled, read requests can bypass the R2 Gateway and be served directly from Cloudflare's edge cache, reducing latency. Note that cached data may not reflect the latest version immediately.

## Learn more

[Consistency ](https://developers.cloudflare.com/r2/reference/consistency/)Learn about R2's consistency model.

[Durability ](https://developers.cloudflare.com/r2/reference/durability/)Learn more about R2's durability guarantee.

[Data location ](https://developers.cloudflare.com/r2/reference/data-location/#jurisdictional-restrictions)Learn how R2 determines where data is stored, and details on jurisdiction restrictions.

[Data security ](https://developers.cloudflare.com/r2/reference/data-security/)Learn about R2's data security properties.

---

title: Objects · Cloudflare R2 docs

description: Objects are individual files or data that you store in an R2 bucket.

lastUpdated: 2025-05-28T15:17:46.000Z

chatbotDeprioritize: false

source_url:

html: https://developers.cloudflare.com/r2/objects/

md: https://developers.cloudflare.com/r2/objects/index.md

---

Objects are individual files or data that you store in an R2 bucket.

* [Upload objects](https://developers.cloudflare.com/r2/objects/upload-objects/)

* [Download objects](https://developers.cloudflare.com/r2/objects/download-objects/)

* [Delete objects](https://developers.cloudflare.com/r2/objects/delete-objects/)

## Other resources

For information on R2 Workers Binding API, refer to [R2 Workers API reference](https://developers.cloudflare.com/r2/api/workers/workers-api-reference/).

---

title: Platform · Cloudflare R2 docs

lastUpdated: 2025-04-09T22:46:56.000Z

chatbotDeprioritize: true

source_url:

html: https://developers.cloudflare.com/r2/platform/

md: https://developers.cloudflare.com/r2/platform/index.md

---

---

title: Pricing · Cloudflare R2 docs

description: "R2 charges based on the total volume of data stored, along with

two classes of operations on that data:"

lastUpdated: 2025-09-30T21:55:40.000Z

chatbotDeprioritize: false

source_url:

html: https://developers.cloudflare.com/r2/pricing/

md: https://developers.cloudflare.com/r2/pricing/index.md

---

R2 charges based on the total volume of data stored, along with two classes of operations on that data:

1. [Class A operations](#class-a-operations) which are more expensive and tend to mutate state.

2. [Class B operations](#class-b-operations) which tend to read existing state.

For the Infrequent Access storage class, [data retrieval](#data-retrieval) fees apply. There are no charges for egress bandwidth for any storage class.

All included usage is on a monthly basis.

Note

To learn about potential cost savings from using R2, refer to the [R2 pricing calculator](https://r2-calculator.cloudflare.com/).

## R2 pricing

| | Standard storage | Infrequent Access storage |

| - | - | - |

| Storage | $0.015 / GB-month | $0.01 / GB-month |

| Class A Operations | $4.50 / million requests | $9.00 / million requests |

| Class B Operations | $0.36 / million requests | $0.90 / million requests |

| Data Retrieval (processing) | None | $0.01 / GB |

| Egress (data transfer to Internet) | Free [1](#user-content-fn-1) | Free [1](#user-content-fn-1) |

Billable unit rounding

Cloudflare rounds up your usage to the next billing unit.

For example:

* If you have performed one million and one operations, you will be billed for two million operations.

* If you have used 1.1 GB-month, you will be billed for 2 GB-month.

* If you have retrieved data (for infrequent access storage) for 1.1 GB, you will be billed for 2 GB.

### Free tier

You can use the following amount of storage and operations each month for free.

| | Free |

| - | - |

| Storage | 10 GB-month / month |

| Class A Operations | 1 million requests / month |

| Class B Operations | 10 million requests / month |

| Egress (data transfer to Internet) | Free [1](#user-content-fn-1) |

Warning

The free tier only applies to Standard storage, and does not apply to Infrequent Access storage.

### Storage usage

Storage is billed using gigabyte-month (GB-month) as the billing metric. A GB-month is calculated by averaging the *peak* storage per day over a billing period (30 days).

For example:

* Storing 1 GB constantly for 30 days will be charged as 1 GB-month.

* Storing 3 GB constantly for 30 days will be charged as 3 GB-month.

* Storing 1 GB for 5 days, then 3 GB for the remaining 25 days will be charged as `1 GB * 5/30 month + 3 GB * 25/30 month = 2.66 GB-month`

For objects stored in Infrequent Access storage, you will be charged for the object for the minimum storage duration even if the object was deleted or moved before the duration specified.

### Class A operations

Class A Operations include `ListBuckets`, `PutBucket`, `ListObjects`, `PutObject`, `CopyObject`, `CompleteMultipartUpload`, `CreateMultipartUpload`, `LifecycleStorageTierTransition`, `ListMultipartUploads`, `UploadPart`, `UploadPartCopy`, `ListParts`, `PutBucketEncryption`, `PutBucketCors` and `PutBucketLifecycleConfiguration`.

### Class B operations

Class B Operations include `HeadBucket`, `HeadObject`, `GetObject`, `UsageSummary`, `GetBucketEncryption`, `GetBucketLocation`, `GetBucketCors` and `GetBucketLifecycleConfiguration`.

### Free operations

Free operations include `DeleteObject`, `DeleteBucket` and `AbortMultipartUpload`.

### Data retrieval

Data retrieval fees apply when you access or retrieve data from the Infrequent Access storage class. This includes any time objects are read or copied.

### Minimum storage duration

For objects stored in Infrequent Access storage, you will be charged for the object for the minimum storage duration even if the object was deleted, moved, or replaced before the specified duration.

| Storage class | Minimum storage duration |

| - | - |

| Standard storage | None |

| Infrequent Access storage | 30 days |

## R2 Data Catalog pricing

R2 Data Catalog is in **public beta**, and any developer with an [R2 subscription](https://developers.cloudflare.com/r2/pricing/) can start using it. Currently, outside of standard R2 storage and operations, you will not be billed for your use of R2 Data Catalog. We will provide at least 30 days' notice before we make any changes or start charging for usage.

To learn more about our thinking on future pricing, refer to the [R2 Data Catalog announcement blog](https://blog.cloudflare.com/r2-data-catalog-public-beta).

## Data migration pricing

### Super Slurper

Super Slurper is free to use. You are only charged for the Class A operations that Super Slurper makes to your R2 bucket. Objects with sizes < 100MiB are uploaded to R2 in a single Class A operation. Larger objects use multipart uploads to increase transfer success rates and will perform multiple Class A operations. Note that your source bucket might incur additional charges as Super Slurper copies objects over to R2.

Once migration completes, you are charged for storage & Class A/B operations as described in previous sections.

### Sippy

Sippy is free to use. You are only charged for the operations Sippy makes to your R2 bucket. If a requested object is not present in R2, Sippy will copy it over from your source bucket. Objects with sizes < 200MiB are uploaded to R2 in a single Class A operation. Larger objects use multipart uploads to increase transfer success rates, and will perform multiple Class A operations. Note that your source bucket might incur additional charges as Sippy copies objects over to R2.

As objects are migrated to R2, they are served from R2, and you are charged for storage & Class A/B operations as described in previous sections.

## Pricing calculator

To learn about potential cost savings from using R2, refer to the [R2 pricing calculator](https://r2-calculator.cloudflare.com/).

## R2 billing examples

### Standard storage example

If a user writes 1,000 objects in R2 **Standard storage** for 1 month with an average size of 1 GB and reads each object 1,000 times during the month, the estimated cost for the month would be:

| | Usage | Free Tier | Billable Quantity | Price |

| - | - | - | - | - |

| Storage | (1,000 objects) \* (1 GB per object) = 1,000 GB-months | 10 GB-months | 990 GB-months | $14.85 |

| Class A Operations | (1,000 objects) \* (1 write per object) = 1,000 writes | 1 million | 0 | $0.00 |

| Class B Operations | (1,000 objects) \* (1,000 reads per object) = 1 million reads | 10 million | 0 | $0.00 |

| Data retrieval (processing) | (1,000 objects) \* (1 GB per object) = 1,000 GB | NA | None | $0.00 |

| **TOTAL** | | | | **$14.85** |

### Infrequent access example

If a user writes 1,000 objects in R2 Infrequent Access storage with an average size of 1 GB, stores them for 5 days, and then deletes them (delete operations are free), and during those 5 days each object is read 1,000 times, the estimated cost for the month would be:

| | Usage | Free Tier | Billable Quantity | Price |

| - | - | - | - | - |

| Storage | (1,000 objects) \* (1 GB per object) = 1,000 GB-months | NA | 1,000 GB-months | $10.00 |

| Class A Operations | (1,000 objects) \* (1 write per object) = 1,000 writes | NA | 1,000 | $9.00 |

| Class B Operations | (1,000 objects) \* (1,000 reads per object) = 1 million reads | NA | 1 million | $0.90 |

| Data retrieval (processing) | (1,000 objects) \* (1 GB per object) = 1,000 GB | NA | 1,000 GB | $10.00 |

| **TOTAL** | | | | **$29.90** |

Note that the minimal storage duration for infrequent access storage is 30 days, which means the billable quantity is 1,000 GB-months, rather than 167 GB-months.

### Asset hosting

If a user writes 100,000 files with an average size of 100 KB object and reads 10,000,000 objects per day, the estimated cost in a month would be:

| | Usage | Free Tier | Billable Quantity | Price |

| - | - | - | - | - |

| Storage | (100,000 objects) \* (100KB per object) | 10 GB-months | 0 GB-months | $0.00 |

| Class A Operations | (100,000 writes) | 1 million | 0 | $0.00 |

| Class B Operations | (10,000,000 reads per day) \* (30 days) | 10 million | 290,000,000 | $104.40 |

| **TOTAL** | | | | **$104.40** |

## Cloudflare billing policy

To learn more about how usage is billed, refer to [Cloudflare Billing Policy](https://developers.cloudflare.com/billing/billing-policy/).

## Frequently asked questions

### Will I be charged for unauthorized requests to my R2 bucket?

No. You are not charged for operations when the caller does not have permission to make the request (HTTP 401 `Unauthorized` response status code).

## Footnotes

1. Egressing directly from R2, including via the [Workers API](https://developers.cloudflare.com/r2/api/workers/), [S3 API](https://developers.cloudflare.com/r2/api/s3/), and [`r2.dev` domains](https://developers.cloudflare.com/r2/buckets/public-buckets/#enable-managed-public-access) does not incur data transfer (egress) charges and is free. If you connect other metered services to an R2 bucket, you may be charged by those services. [↩](#user-content-fnref-1) [↩2](#user-content-fnref-1-2) [↩3](#user-content-fnref-1-3)

---

title: R2 SQL · Cloudflare R2 docs

description: R2 SQL is a serverless SQL interface for Cloudflare R2, enabling

querying and analyzing data.

lastUpdated: 2025-10-30T16:19:51.000Z

chatbotDeprioritize: false

source_url:

html: https://developers.cloudflare.com/r2/r2-sql/

md: https://developers.cloudflare.com/r2/r2-sql/index.md

---

---

title: Reference · Cloudflare R2 docs

lastUpdated: 2025-04-09T22:46:56.000Z

chatbotDeprioritize: true

source_url:

html: https://developers.cloudflare.com/r2/reference/

md: https://developers.cloudflare.com/r2/reference/index.md

---

* [Consistency model](https://developers.cloudflare.com/r2/reference/consistency/)

* [Data location](https://developers.cloudflare.com/r2/reference/data-location/)

* [Data security](https://developers.cloudflare.com/r2/reference/data-security/)

* [Durability](https://developers.cloudflare.com/r2/reference/durability/)

* [Unicode interoperability](https://developers.cloudflare.com/r2/reference/unicode-interoperability/)

* [Wrangler commands](https://developers.cloudflare.com/r2/reference/wrangler-commands/)

* [Partners](https://developers.cloudflare.com/r2/reference/partners/)

---

title: Tutorials · Cloudflare R2 docs

description: View tutorials to help you get started with R2.

lastUpdated: 2025-08-14T13:46:41.000Z

chatbotDeprioritize: false

source_url:

html: https://developers.cloudflare.com/r2/tutorials/

md: https://developers.cloudflare.com/r2/tutorials/index.md

---

View tutorials to help you get started with R2.

## Docs

| Name | Last Updated | Difficulty |

| - | - | - |

| [Build an end to end data pipeline](https://developers.cloudflare.com/r2-sql/tutorials/end-to-end-pipeline/) | 5 months ago | |

| [Point to R2 bucket with a custom domain](https://developers.cloudflare.com/rules/origin-rules/tutorials/point-to-r2-bucket-with-custom-domain/) | 10 months ago | Beginner |

| [Use event notification to summarize PDF files on upload](https://developers.cloudflare.com/r2/tutorials/summarize-pdf/) | over 1 year ago | Intermediate |

| [Use SSE-C](https://developers.cloudflare.com/r2/examples/ssec/) | over 1 year ago | Intermediate |

| [Use R2 as static asset storage with Cloudflare Pages](https://developers.cloudflare.com/pages/tutorials/use-r2-as-static-asset-storage-for-pages/) | over 1 year ago | Intermediate |

| [Create a fine-tuned OpenAI model with R2](https://developers.cloudflare.com/workers/tutorials/create-finetuned-chatgpt-ai-models-with-r2/) | over 1 year ago | Intermediate |

| [Protect an R2 Bucket with Cloudflare Access](https://developers.cloudflare.com/r2/tutorials/cloudflare-access/) | almost 2 years ago | |

| [Log and store upload events in R2 with event notifications](https://developers.cloudflare.com/r2/tutorials/upload-logs-event-notifications/) | almost 2 years ago | Beginner |

| [Use Cloudflare R2 as a Zero Trust log destination](https://developers.cloudflare.com/cloudflare-one/tutorials/r2-logs/) | about 2 years ago | Beginner |

| [Deploy a Browser Rendering Worker with Durable Objects](https://developers.cloudflare.com/browser-rendering/workers-bindings/browser-rendering-with-do/) | over 2 years ago | Beginner |

| [Securely access and upload assets with Cloudflare R2](https://developers.cloudflare.com/workers/tutorials/upload-assets-with-r2/) | over 2 years ago | Beginner |

| [Mastodon](https://developers.cloudflare.com/r2/tutorials/mastodon/) | about 3 years ago | Beginner |

| [Postman](https://developers.cloudflare.com/r2/tutorials/postman/) | over 3 years ago | |

## Videos

Welcome to the Cloudflare Developer Channel

Welcome to the Cloudflare Developers YouTube channel. We've got tutorials and working demos and everything you need to level up your projects. Whether you're working on your next big thing or just dorking around with some side projects, we've got you covered! So why don't you come hang out, subscribe to our developer channel and together we'll build something awesome. You're gonna love it.

Optimize your AI App & fine-tune models (AI Gateway, R2)

In this workshop, Kristian Freeman, Cloudflare Developer Advocate, shows how to optimize your existing AI applications with Cloudflare AI Gateway, and how to finetune OpenAI models using R2.

---

title: Videos · Cloudflare R2 docs

lastUpdated: 2025-06-05T08:11:08.000Z

chatbotDeprioritize: false

source_url:

html: https://developers.cloudflare.com/r2/video-tutorials/

md: https://developers.cloudflare.com/r2/video-tutorials/index.md

---

[Introduction to R2 ](https://developers.cloudflare.com/learning-paths/r2-intro/series/r2-1/)Learn about Cloudflare R2, an object storage solution designed to handle your data and files efficiently. It is ideal for storing large media files, creating data lakes, or delivering web assets.

---

title: Error codes · Cloudflare R2 docs

description: This page documents error codes returned by R2 when using the

Workers API or the S3-compatible API, along with recommended fixes to help

with troubleshooting.

lastUpdated: 2026-02-13T12:50:29.000Z

chatbotDeprioritize: false

source_url:

html: https://developers.cloudflare.com/r2/api/error-codes/

md: https://developers.cloudflare.com/r2/api/error-codes/index.md

---

This page documents error codes returned by R2 when using the [Workers API](https://developers.cloudflare.com/r2/api/workers/) or the [S3-compatible API](https://developers.cloudflare.com/r2/api/s3/), along with recommended fixes to help with troubleshooting.

## How errors are returned

For the **Workers API**, R2 operations throw exceptions that you can catch. The error code is included at the end of the `message` property:

```js

try {

await env.MY_BUCKET.put("my-key", data, { customMetadata: largeMetadata });

} catch (error) {

console.error(error.message);

// "put: Your metadata headers exceed the maximum allowed metadata size. (10012)"

}

```

For the **S3-compatible API**, errors are returned as XML in the response body:

```xml

NoSuchKey

The specified key does not exist.

```

## Error code reference

### Authentication and authorization errors

| Error Code | S3 Code | HTTP Status | Details | Recommended Fix |

| - | - | - | - | - |

| 10002 | Unauthorized | 401 | Missing or invalid authentication credentials. | Verify your [API token](https://developers.cloudflare.com/r2/api/tokens/) or access key credentials are correct and have not expired. |

| 10003 | AccessDenied | 403 | Insufficient permissions for the requested operation. | Check that your [API token](https://developers.cloudflare.com/r2/api/tokens/) has the required permissions for the bucket and operation. |

| 10018 | ExpiredRequest | 400 | Presigned URL or request signature has expired. | Regenerate the [presigned URL](https://developers.cloudflare.com/r2/api/s3/presigned-urls/) or signature. |

| 10035 | SignatureDoesNotMatch | 403 | Request signature does not match calculated signature. | Verify your secret key and signing algorithm. Check for URL encoding issues. |

| 10042 | NotEntitled | 403 | Account not entitled to this feature. | Ensure your account has an [R2 subscription](https://developers.cloudflare.com/r2/pricing/). |

### Bucket errors

| Error Code | S3 Code | HTTP Status | Details | Recommended Fix |

| - | - | - | - | - |

| 10005 | InvalidBucketName | 400 | Bucket name does not meet naming requirements. | Bucket names must be 3-63 chars, lowercase alphanumeric and hyphens, start/end with alphanumeric. |

| 10006 | NoSuchBucket | 404 | The specified bucket does not exist. | Verify the bucket name is correct and the bucket exists in your account. |

| 10008 | BucketNotEmpty | 409 | Cannot delete bucket that contains objects. | Delete all objects in the bucket before deleting the bucket. |

| 10009 | TooManyBuckets | 400 | Account bucket limit exceeded (default: 1,000,000 buckets). | Request a limit increase via the [Limits Increase Request Form](https://forms.gle/ukpeZVLWLnKeixDu7). |

| 10073 | BucketConflict | 409 | Bucket name already exists. | Choose a different bucket name. Bucket names must be unique within your account. |

### Object errors

| Error Code | S3 Code | HTTP Status | Details | Recommended Fix |

| - | - | - | - | - |

| 10007 | NoSuchKey | 404 | The specified object key does not exist. For the [Workers API](https://developers.cloudflare.com/r2/api/workers/workers-api-reference/), `get()` and `head()` return `null` instead of throwing. | Verify the object key is correct and the object has not been deleted. |

| 10020 | InvalidObjectName | 400 | Object key contains invalid characters or is too long. | Use valid UTF-8 characters. Maximum key length is 1024 bytes. |

| 100100 | EntityTooLarge | 400 | Object exceeds maximum size (5 GiB for single upload, 5 TiB for multipart). | Use [multipart upload](https://developers.cloudflare.com/r2/objects/upload-objects/#multipart-upload) for objects larger than 5 GiB. Maximum object size is 5 TiB. |

| 10012 | MetadataTooLarge | 400 | Custom metadata exceeds the 8,192 byte limit. | Reduce custom metadata size. Maximum is 8,192 bytes total for all custom metadata. |

| 10069 | ObjectLockedByBucketPolicy | 403 | Object is protected by a bucket lock rule and cannot be modified or deleted. | Wait for the retention period to expire. Refer to [bucket locks](https://developers.cloudflare.com/r2/buckets/bucket-locks/). |

### Upload and request errors

| Error Code | S3 Code | HTTP Status | Details | Recommended Fix |

| - | - | - | - | - |

| 10033 | MissingContentLength | 411 | `Content-Length` header required but missing. | Include the `Content-Length` header in PUT/POST requests. |

| 10013 | IncompleteBody | 400 | Request body terminated before expected `Content-Length`. | Ensure the full request body is sent. Check for network interruptions or client timeouts. |

| 10014 | InvalidDigest | 400 | Checksum header format is malformed. | Ensure checksums are properly encoded (base64 for SHA/CRC checksums). |

| 10037 | BadDigest | 400 | Provided checksum does not match the uploaded content. | Verify data integrity and retry the upload. |

| 10039 | InvalidRange | 416 | Requested byte range is not satisfiable. | Ensure the range start is less than object size. Check `Range` header format. |

| 10031 | PreconditionFailed | 412 | Conditional headers (`If-Match`, `If-Unmodified-Since`, etc.) were not satisfied. | Object's ETag or modification time does not match your condition. Refetch and retry. Refer to [conditional operations](https://developers.cloudflare.com/r2/api/s3/extensions/#conditional-operations-in-putobject). |

### Multipart upload errors

| Error Code | S3 Code | HTTP Status | Details | Recommended Fix |

| - | - | - | - | - |

| 10011 | EntityTooSmall | 400 | Multipart part is below minimum size (5 MiB), except for the last part. | Ensure each part (except the last) is at least 5 MiB. |

| 10024 | NoSuchUpload | 404 | Multipart upload does not exist or was aborted. | Verify the `uploadId` is correct. By default, incomplete multipart uploads expire after 7 days. Refer to [object lifecycles](https://developers.cloudflare.com/r2/buckets/object-lifecycles/). |

| 10025 | InvalidPart | 400 | One or more parts could not be found when completing the upload. | Verify each part was uploaded successfully and use the exact ETag returned from `UploadPart`. |

| 10048 | InvalidPart | 400 | All non-trailing parts must have the same size. | Ensure all parts except the last have identical sizes. R2 requires uniform part sizes for multipart uploads. |

### Service errors

| Error Code | S3 Code | HTTP Status | Details | Recommended Fix |

| - | - | - | - | - |

| 10001 | InternalError | 500 | An internal error occurred. | Retry the request. If persistent, check [Cloudflare Status](https://www.cloudflarestatus.com) or contact support. |

| 10043 | ServiceUnavailable | 503 | Service is temporarily unavailable. | Retry with exponential backoff. Check [Cloudflare Status](https://www.cloudflarestatus.com). |

| 10054 | ClientDisconnect | 400 | Client disconnected before request completed. | Check network connectivity and retry. |

| 10058 | TooManyRequests | 429 | Rate limit exceeded. Often caused by multiple concurrent requests to the same object key (limit: 1 write/second per key). | Check if multiple clients are accessing the same object key. See [R2 limits](https://developers.cloudflare.com/r2/platform/limits/). |

---

title: S3 · Cloudflare R2 docs

lastUpdated: 2025-12-29T18:01:22.000Z

chatbotDeprioritize: true

source_url:

html: https://developers.cloudflare.com/r2/api/s3/

md: https://developers.cloudflare.com/r2/api/s3/index.md

---

* [S3 API compatibility](https://developers.cloudflare.com/r2/api/s3/api/)

* [Extensions](https://developers.cloudflare.com/r2/api/s3/extensions/)

* [Presigned URLs](https://developers.cloudflare.com/r2/api/s3/presigned-urls/)

---

title: Authentication · Cloudflare R2 docs

description: You can generate an API token to serve as the Access Key for usage

with existing S3-compatible SDKs or XML APIs.

lastUpdated: 2026-02-06T11:10:34.000Z

chatbotDeprioritize: false

source_url:

html: https://developers.cloudflare.com/r2/api/tokens/

md: https://developers.cloudflare.com/r2/api/tokens/index.md

---

You can generate an API token to serve as the Access Key for usage with existing S3-compatible SDKs or XML APIs.

Note

This page contains instructions on generating API tokens *specifically* for R2. Note that this is different from generating API tokens for other services, as documented in [Create API token](https://developers.cloudflare.com/fundamentals/api/get-started/create-token/).

You must purchase R2 before you can generate an API token.

To create an API token:

1. In the Cloudflare dashboard, go to the **R2 object storage** page.

[Go to **Overview**](https://dash.cloudflare.com/?to=/:account/r2/overview)

2. Select **Manage R2 API tokens**.

3. Choose to create either:

* **Create Account API token** - These tokens are tied to the Cloudflare account itself and can be used by any authorized system or user. Only users with the Super Administrator role can view or create them. These tokens remain valid until manually revoked.

* **Create User API token** - These tokens are tied to your individual Cloudflare user. They inherit your personal permissions and become inactive if your user is removed from the account.

4. Under **Permissions**, choose a permission types for your token. Refer to [Permissions](#permissions) for information about each option.

5. (Optional) If you select the **Object Read and Write** or **Object Read** permissions, you can scope your token to a set of buckets.

6. Select **Create Account API token** or **Create User API token**.

After your token has been successfully created, review your **Secret Access Key** and **Access Key ID** values. These may often be referred to as Client Secret and Client ID, respectively.

Warning

You will not be able to access your **Secret Access Key** again after this step. Copy and record both values to avoid losing them.

You will also need to configure the `endpoint` in your S3 client to `https://.r2.cloudflarestorage.com`.

Find your [account ID in the Cloudflare dashboard](https://developers.cloudflare.com/fundamentals/account/find-account-and-zone-ids/).

Buckets created with jurisdictions must be accessed via jurisdiction-specific endpoints:

* European Union (EU): `https://.eu.r2.cloudflarestorage.com`

* FedRAMP: `https://.fedramp.r2.cloudflarestorage.com`

Warning

Jurisdictional buckets can only be accessed via the corresponding jurisdictional endpoint. Most S3 clients will not let you configure multiple `endpoints`, so you'll generally have to initialize one client per jurisdiction.

## Permissions

| Permission | Description |

| - | - |

| Admin Read & Write | Allows the ability to create, list, and delete buckets, edit bucket configuration, read, write, and list objects, and read and write to data catalog tables and associated metadata. |

| Admin Read only | Allows the ability to list buckets and view bucket configuration, read and list objects, and read from the data catalog tables and associated metadata. |

| Object Read & Write | Allows the ability to read, write, and list objects in specific buckets. |

| Object Read only | Allows the ability to read and list objects in specific buckets. |

Note

Currently **Admin Read & Write** or **Admin Read only** permission is required to use [R2 Data Catalog](https://developers.cloudflare.com/r2/data-catalog/).

## Create API tokens via API

You can create API tokens via the API and use them to generate corresponding Access Key ID and Secret Access Key values. To get started, refer to [Create API tokens via the API](https://developers.cloudflare.com/fundamentals/api/how-to/create-via-api/). Below are the specifics for R2.

### Access Policy

An Access Policy specifies what resources the token can access and the permissions it has.

#### Resources

There are two relevant resource types for R2: `Account` and `Bucket`. For more information on the Account resource type, refer to [Account](https://developers.cloudflare.com/fundamentals/api/how-to/create-via-api/#account).

##### Bucket

Include a set of R2 buckets or all buckets in an account.

A specific bucket is represented as:

```json

"com.cloudflare.edge.r2.bucket.__": "*"

```

* `ACCOUNT_ID`: Refer to [Find zone and account IDs](https://developers.cloudflare.com/fundamentals/account/find-account-and-zone-ids/#find-account-id-workers-and-pages).

* `JURISDICTION`: The [jurisdiction](https://developers.cloudflare.com/r2/reference/data-location/#available-jurisdictions) where the R2 bucket lives. For buckets not created in a specific jurisdiction this value will be `default`.

* `BUCKET_NAME`: The name of the bucket your Access Policy applies to.

All buckets in an account are represented as:

```json

"com.cloudflare.api.account.": {

"com.cloudflare.edge.r2.bucket.*": "*"

}

```

* `ACCOUNT_ID`: Refer to [Find zone and account IDs](https://developers.cloudflare.com/fundamentals/account/find-account-and-zone-ids/#find-account-id-workers-and-pages).

#### Permission groups

Determine what [permission groups](https://developers.cloudflare.com/fundamentals/api/how-to/create-via-api/#permission-groups) should be applied.

| Permission group | Resource | Description |

| - | - | - |

| `Workers R2 Storage Write` | Account | Can create, delete, and list buckets, edit bucket configuration, and read, write, and list objects. |

| `Workers R2 Storage Read` | Account | Can list buckets and view bucket configuration, and read and list objects. |

| `Workers R2 Storage Bucket Item Write` | Bucket | Can read, write, and list objects in buckets. |

| `Workers R2 Storage Bucket Item Read` | Bucket | Can read and list objects in buckets. |

| `Workers R2 Data Catalog Write` | Account | Can read from and write to data catalogs. This permission allows access to the Iceberg REST catalog interface. |

| `Workers R2 Data Catalog Read` | Account | Can read from data catalogs. This permission allows read-only access to the Iceberg REST catalog interface. |

#### Example Access Policy

```json

[

{

"id": "f267e341f3dd4697bd3b9f71dd96247f",

"effect": "allow",

"resources": {

"com.cloudflare.edge.r2.bucket.4793d734c0b8e484dfc37ec392b5fa8a_default_my-bucket": "*",

"com.cloudflare.edge.r2.bucket.4793d734c0b8e484dfc37ec392b5fa8a_eu_my-eu-bucket": "*"

},

"permission_groups": [

{

"id": "6a018a9f2fc74eb6b293b0c548f38b39",

"name": "Workers R2 Storage Bucket Item Read"

}

]

}

]

```

### Get S3 API credentials from an API token

You can get the Access Key ID and Secret Access Key values from the response of the [Create Token](https://developers.cloudflare.com/api/resources/user/subresources/tokens/methods/create/) API:

* Access Key ID: The `id` of the API token.

* Secret Access Key: The SHA-256 hash of the API token `value`.

Refer to [Authenticate against R2 API using auth tokens](https://developers.cloudflare.com/r2/examples/authenticate-r2-auth-tokens/) for a tutorial with JavaScript, Python, and Go examples.

## Temporary access credentials

If you need to create temporary credentials for a bucket or a prefix/object within a bucket, you can use the [temp-access-credentials endpoint](https://developers.cloudflare.com/api/resources/r2/subresources/temporary_credentials/methods/create/) in the API. You will need an existing R2 token to pass in as the parent access key id. You can use the credentials from the API result for an S3-compatible request by setting the credential variables like so:

```plaintext

AWS_ACCESS_KEY_ID =

AWS_SECRET_ACCESS_KEY =

AWS_SESSION_TOKEN =

```

Note

The temporary access key cannot have a permission that is higher than the parent access key. e.g. if the parent key is set to `Object Read Write`, the temporary access key could only have `Object Read Write` or `Object Read Only` permissions.

---

title: Workers API · Cloudflare R2 docs

lastUpdated: 2025-12-29T18:01:22.000Z

chatbotDeprioritize: true

source_url:

html: https://developers.cloudflare.com/r2/api/workers/

md: https://developers.cloudflare.com/r2/api/workers/index.md

---

* [Workers API reference](https://developers.cloudflare.com/r2/api/workers/workers-api-reference/)

* [Use R2 from Workers](https://developers.cloudflare.com/r2/api/workers/workers-api-usage/)

* [Use the R2 multipart API from Workers](https://developers.cloudflare.com/r2/api/workers/workers-multipart-usage/)

---

title: Connect to Iceberg engines · Cloudflare R2 docs

description: Find detailed setup instructions for Apache Spark and other common

query engines.

lastUpdated: 2025-09-25T04:10:41.000Z

chatbotDeprioritize: true

source_url:

html: https://developers.cloudflare.com/r2/data-catalog/config-examples/

md: https://developers.cloudflare.com/r2/data-catalog/config-examples/index.md

---

Below are configuration examples to connect various Iceberg engines to [R2 Data Catalog](https://developers.cloudflare.com/r2/data-catalog/):

* [Apache Trino](https://developers.cloudflare.com/r2/data-catalog/config-examples/trino/)

* [DuckDB](https://developers.cloudflare.com/r2/data-catalog/config-examples/duckdb/)

* [PyIceberg](https://developers.cloudflare.com/r2/data-catalog/config-examples/pyiceberg/)

* [Snowflake](https://developers.cloudflare.com/r2/data-catalog/config-examples/snowflake/)

* [Spark (PySpark)](https://developers.cloudflare.com/r2/data-catalog/config-examples/spark-python/)

* [Spark (Scala)](https://developers.cloudflare.com/r2/data-catalog/config-examples/spark-scala/)

* [StarRocks](https://developers.cloudflare.com/r2/data-catalog/config-examples/starrocks/)

---

title: Deleting data · Cloudflare R2 docs

description: How to properly delete data from R2 Data Catalog

lastUpdated: 2026-01-14T21:16:44.000Z

chatbotDeprioritize: false

source_url:

html: https://developers.cloudflare.com/r2/data-catalog/deleting-data/

md: https://developers.cloudflare.com/r2/data-catalog/deleting-data/index.md

---

Deleting data from R2 Data Catalog or any Apache Iceberg catalog requires that operations are done in a transaction through the catalog itself. Manually deleting metadata or data files directly can lead to data catalog corruption.

## Automatic table maintenance

R2 Data Catalog can automatically manage table maintenance operations such as snapshot expiration and compaction. These continuous operations help keep latency and storage costs down.

* **Snapshot expiration**: Automatically removes old snapshots. This reduces metadata overhead. Data files are not removed until orphan file removal is run.

* **Compaction**: Merges small data files into larger ones. This optimizes read performance and reduces the number of files read during queries.

Without enabling automatic maintenance, you need to manually handle these operations.

Learn more in the [table maintenance](https://developers.cloudflare.com/r2/data-catalog/table-maintenance/) documentation.

## Examples of enabling automatic table maintenance in R2 Data Catalog

```bash

# Enable automatic snapshot expiration for entire catalog

npx wrangler r2 bucket catalog snapshot-expiration enable my-bucket \

--older-than-days 30 \

--retain-last 5

# Enable automatic compaction for entire catalog

npx wrangler r2 bucket catalog compaction enable my-bucket \

--target-size 256

```

Refer to additional examples in the [manage catalogs](https://developers.cloudflare.com/r2/data-catalog/manage-catalogs/) documentation.

## Manually deleting and removing data

You need to manually delete data for:

* Complying with data retention policies such as GDPR or CCPA.

* Selective based deletes using conditional logic.

* Removing stale or unreferenced files that R2 Data Catalog does not manage.

The following are basic examples using PySpark but similar operations can be performed using other Iceberg-compatible engines. To configure PySpark, refer to our [example](https://developers.cloudflare.com/r2/data-catalog/config-examples/spark-python/) or the official [PySpark documentation](https://spark.apache.org/docs/latest/api/python/getting_started/index.html).

### Deleting rows from a table

```py

# Creates new snapshots and marks old files for cleanup

spark.sql("""

DELETE FROM r2dc.namespace.table_name

WHERE column_name = 'value'

""")

# The following is effectively a TRUNCATE operation

spark.sql("DELETE FROM r2dc.namespace.table_name")

# For large deletes, use partitioned tables and delete entire partitions for faster performance:

spark.sql("""

DELETE FROM r2dc.namespace.table_name

WHERE date_partition < '2024-01-01'

""")

```

### Dropping tables and namespaces

```py

# Removes table from catalog but keeps data files in R2 storage

spark.sql("DROP TABLE r2dc.namespace.table_name")

# âš ï¸ DANGER: Permanently deletes all data files from R2

# This operation cannot be undone

spark.sql("DROP TABLE r2dc.namespace.table_name PURGE")

# Use CASCADE to drop all tables within the namespace

spark.sql("DROP NAMESPACE r2dc.namespace_name CASCADE")

# You will need to PURGE the tables before running CASCADE to permanently delete data files

# This can be done with a loop over all tables in the namespace

tables = spark.sql("SHOW TABLES IN r2dc.namespace_name").collect()

for row in tables:

table_name = row['tableName']

spark.sql(f"DROP TABLE r2dc.namespace_name.{table_name} PURGE")

spark.sql("DROP NAMESPACE r2dc.namespace_name CASCADE")

```

Data loss warning

`DROP TABLE ... PURGE` permanently deletes all data files from R2 storage. This operation cannot be undone and bypasses time-travel capabilities.

### Manual maintenance operations

```py

# Remove old metadata and data files marked for deletion

# The following retains the last 5 snapshots and deletes files older than Nov 28, 2024

spark.sql("""

CALL r2dc.system.expire_snapshots(

table => 'r2dc.namespace_name.table_name',

older_than => TIMESTAMP '2024-11-28 00:00:00',

retain_last => 5

)

""")

# Removes unreferenced data files from R2 storage (orphan files)

spark.sql("""

CALL r2dc.system.remove_orphan_files(

table => 'namespace.table_name'

)

""")

# Rewrite data files with a target file size (e.g., 512 MB)

spark.sql("""

CALL r2dc.system.rewrite_data_files(

table => 'r2dc.namespace_name.table_name',

options => map('target-file-size-bytes', '536870912')

)

""")

```

## About Apache Iceberg metadata

Apache Iceberg uses a layered metadata structure to manage table data efficiently. Here are the key components and file structure:

* **metadata.json**: Top-level JSON file pointing to the current snapshot

* **snapshot-**\*: Immutable table state for a given point in time

* **manifest-list-\*.avro**: An Avro file listing all manifest files for a given snapshot

* **manifest-file-\*.avro**: An Avro file tracking data files and their statistics

* **data-\*.parquet**: Parquet files containing actual table data

* **Note**: Unchanged manifest files are reused across snapshots

Warning

Manually modifying or deleting any of these files directly can lead to data catalog corruption.

### What happens during deletion

Apache Iceberg supports two deletion modes: **Copy-on-Write (COW)** and **Merge-on-Read (MOR)**. Both create a new snapshot and mark old files for cleanup, but handle the deletion differently:

| Aspect | Copy-on-Write (COW) | Merge-on-Read (MOR) |

| - | - | - |

| **How deletes work** | Rewrites data files without deleted rows | Creates delete files marking rows to skip |

| **Query performance** | Fast (no merge needed) | Slower (requires read-time merge) |

| **Write performance** | Slower (rewrites data files) | Fast (only writes delete markers) |

| **Storage impact** | Creates new data files immediately | Accumulates delete files over time |

| **Maintenance needs** | Snapshot expiration | Snapshot expiration + compaction (`rewrite_data_files`) |

| **Best for** | Read-heavy workloads | Write-heavy workloads with frequent small mutations |

Important for all deletion modes

* Deleted data is **not immediately removed** from R2 - files are marked for cleanup

* Enable [snapshot expiration](https://developers.cloudflare.com/r2/data-catalog/table-maintenance) in R2 Data Catalog to automatically clean up old snapshots and files

### Common deletion operations

These operations work the same way for both COW and MOR tables:

| Operation | What it does | Data deleted? | Reversible? |

| - | - | - | - |

| `DELETE FROM` | Removes rows matching condition | No (marked for cleanup) | Via time travel[1](#user-content-fn-1) |

| `DROP TABLE` | Removes table from catalog | No | Yes (if data files exist) |

| `DROP TABLE ... PURGE` | Removes table and deletes data | **Yes** | **No** |

| `expire_snapshots` | Cleans up old snapshots/files | **Yes** | **No** |

| `remove_orphan_files` | Removes unreferenced files | **Yes** | **No** |

### MOR-specific operations

For Merge-on-Read tables, you may need to manually apply deletes for performance:

| Operation | What it does | When to use |

| - | - | - |

| `rewrite_data_files` (compaction) | Applies deletes and consolidates files | When query performance degrades due to many delete files |

Note

R2 Data Catalog can automate [rewriting data files](https://developers.cloudflare.com/r2/data-catalog/table-maintenance/) for you.

## Related resources

* [Table maintenance](https://developers.cloudflare.com/r2/data-catalog/table-maintenance) - Learn about automatic maintenance operations

* [R2 Data Catalog](https://developers.cloudflare.com/r2/data-catalog/) - Overview and getting started guide

* [Query data](https://developers.cloudflare.com/r2-sql/query-data) - Query tables with R2 SQL

* [Apache Iceberg Maintenance](https://iceberg.apache.org/docs/latest/maintenance/) - Official Iceberg documentation on table maintenance

## Footnotes

1. Time travel available until `expire_snapshots` is called [↩](#user-content-fnref-1)

---

title: Getting started · Cloudflare R2 docs

description: Learn how to enable the R2 Data Catalog on your bucket, load sample

data, and run your first query.

lastUpdated: 2025-09-25T04:07:16.000Z

chatbotDeprioritize: false

source_url:

html: https://developers.cloudflare.com/r2/data-catalog/get-started/

md: https://developers.cloudflare.com/r2/data-catalog/get-started/index.md

---

This guide will instruct you through:

* Creating your first [R2 bucket](https://developers.cloudflare.com/r2/buckets/) and enabling its [data catalog](https://developers.cloudflare.com/r2/data-catalog/).

* Creating an [API token](https://developers.cloudflare.com/r2/api/tokens/) needed for query engines to authenticate with your data catalog.

* Using [PyIceberg](https://py.iceberg.apache.org/) to create your first Iceberg table in a [marimo](https://marimo.io/) Python notebook.

* Using [PyIceberg](https://py.iceberg.apache.org/) to load sample data into your table and query it.

## Prerequisites

1. Sign up for a [Cloudflare account](https://dash.cloudflare.com/sign-up/workers-and-pages).

2. Install [`Node.js`](https://docs.npmjs.com/downloading-and-installing-node-js-and-npm).

Node.js version manager

Use a Node version manager like [Volta](https://volta.sh/) or [nvm](https://github.com/nvm-sh/nvm) to avoid permission issues and change Node.js versions. [Wrangler](https://developers.cloudflare.com/workers/wrangler/install-and-update/), discussed later in this guide, requires a Node version of `16.17.0` or later.

## 1. Create an R2 bucket

* Wrangler CLI

1. If not already logged in, run:

```plaintext

npx wrangler login

```

2. Create an R2 bucket:

```plaintext

npx wrangler r2 bucket create r2-data-catalog-tutorial

```

* Dashboard

1. In the Cloudflare dashboard, go to the **R2 object storage** page.

[Go to **Overview**](https://dash.cloudflare.com/?to=/:account/r2/overview)

2. Select **Create bucket**.

3. Enter the bucket name: r2-data-catalog-tutorial

4. Select **Create bucket**.

## 2. Enable the data catalog for your bucket

* Wrangler CLI

Then, enable the catalog on your chosen R2 bucket:

```plaintext

npx wrangler r2 bucket catalog enable r2-data-catalog-tutorial

```

When you run this command, take note of the "Warehouse" and "Catalog URI". You will need these later.

* Dashboard

1. In the Cloudflare dashboard, go to the **R2 object storage** page.

[Go to **Overview**](https://dash.cloudflare.com/?to=/:account/r2/overview)

2. Select the bucket: r2-data-catalog-tutorial.

3. Switch to the **Settings** tab, scroll down to **R2 Data Catalog**, and select **Enable**.

4. Once enabled, note the **Catalog URI** and **Warehouse name**.

## 3. Create an API token

Iceberg clients (including [PyIceberg](https://py.iceberg.apache.org/)) must authenticate to the catalog with an [R2 API token](https://developers.cloudflare.com/r2/api/tokens/) that has both R2 and catalog permissions.

1. In the Cloudflare dashboard, go to the **R2 object storage** page.

[Go to **Overview**](https://dash.cloudflare.com/?to=/:account/r2/overview)

2. Select **Manage API tokens**.

3. Select **Create API token**.

4. Select the **R2 Token** text to edit your API token name.

5. Under **Permissions**, choose the **Admin Read & Write** permission.

6. Select **Create API Token**.

7. Note the **Token value**.

## 4. Install uv

You need to install a Python package manager. In this guide, use [uv](https://docs.astral.sh/uv/). If you do not already have uv installed, follow the [installing uv guide](https://docs.astral.sh/uv/getting-started/installation/).

## 5. Install marimo and set up your project with uv

We will use [marimo](https://github.com/marimo-team/marimo) as a Python notebook.

1. Create a directory where our notebook will be stored:

```plaintext

mkdir r2-data-catalog-notebook

```

2. Change into our new directory:

```plaintext

cd r2-data-catalog-notebook

```

3. Initialize a new uv project (this creates a `.venv` and a `pyproject.toml`):

```plaintext

uv init

```

4. Add marimo and required dependencies:

```py

uv add marimo pyiceberg pyarrow pandas

```

## 6. Create a Python notebook to interact with the data warehouse

1. Create a file called `r2-data-catalog-tutorial.py`.

2. Paste the following code snippet into your `r2-data-catalog-tutorial.py` file:

```py

import marimo

__generated_with = "0.11.31"

app = marimo.App(width="medium")

@app.cell

def _():

import marimo as mo

return (mo,)

@app.cell

def _():

import pandas

import pyarrow as pa

import pyarrow.compute as pc

import pyarrow.parquet as pq

from pyiceberg.catalog.rest import RestCatalog

# Define catalog connection details (replace variables)

WAREHOUSE = ""

TOKEN = ""

CATALOG_URI = ""

# Connect to R2 Data Catalog

catalog = RestCatalog(

name="my_catalog",

warehouse=WAREHOUSE,

uri=CATALOG_URI,

token=TOKEN,

)

return (

CATALOG_URI,

RestCatalog,

TOKEN,

WAREHOUSE,

catalog,

pa,

pandas,

pc,

pq,

)

@app.cell

def _(catalog):

# Create default namespace if needed

catalog.create_namespace_if_not_exists("default")

return

@app.cell

def _(pa):

# Create simple PyArrow table

df = pa.table({

"id": [1, 2, 3],

"name": ["Alice", "Bob", "Charlie"],

"score": [80.0, 92.5, 88.0],

})

return (df,)

@app.cell

def _(catalog, df):

# Create or load Iceberg table

test_table = ("default", "people")

if not catalog.table_exists(test_table):

print(f"Creating table: {test_table}")

table = catalog.create_table(

test_table,

schema=df.schema,

)

else:

table = catalog.load_table(test_table)

return table, test_table

@app.cell

def _(df, table):

# Append data

table.append(df)

return

@app.cell

def _(table):

print("Table contents:")

scanned = table.scan().to_arrow()

print(scanned.to_pandas())

return (scanned,)

@app.cell

def _():

# Optional cleanup. To run uncomment and run cell

# print(f"Deleting table: {test_table}")

# catalog.drop_table(test_table)

# print("Table dropped.")

return

if __name__ == "__main__":

app.run()

```

3. Replace the `CATALOG_URI`, `WAREHOUSE`, and `TOKEN` variables with your values from sections **2** and **3** respectively.

4. Launch the notebook editor in your browser:

```plaintext

uv run marimo edit r2-data-catalog-tutorial.py

```

Once your notebook connects to the catalog, you'll see the catalog along with its namespaces and tables appear in marimo's Datasources panel.

In the Python notebook above, you:

1. Connect to your catalog.

2. Create the `default` namespace.

3. Create a simple PyArrow table.

4. Create (or load) the `people` table in the `default` namespace.

5. Append sample data to the table.

6. Print the contents of the table.

7. (Optional) Drop the `people` table we created for this tutorial.

## Learn more

[Managing catalogs ](https://developers.cloudflare.com/r2/data-catalog/manage-catalogs/)Enable or disable R2 Data Catalog on your bucket, retrieve configuration details, and authenticate your Iceberg engine.

[Connect to Iceberg engines ](https://developers.cloudflare.com/r2/data-catalog/config-examples/)Find detailed setup instructions for Apache Spark and other common query engines.

---

title: Manage catalogs · Cloudflare R2 docs

description: Understand how to manage Iceberg REST catalogs associated with R2 buckets

lastUpdated: 2026-02-06T15:42:11.000Z

chatbotDeprioritize: false

source_url:

html: https://developers.cloudflare.com/r2/data-catalog/manage-catalogs/

md: https://developers.cloudflare.com/r2/data-catalog/manage-catalogs/index.md

---

Learn how to:

* Enable and disable [R2 Data Catalog](https://developers.cloudflare.com/r2/data-catalog/) on your buckets.

* Enable and disable [table maintenance](https://developers.cloudflare.com/r2/data-catalog/table-maintenance/) features like compaction and snapshot expiration.

* Authenticate Iceberg engines using API tokens.

## Enable R2 Data Catalog on a bucket

Enabling the catalog on a bucket turns on the REST catalog interface and provides a **Catalog URI** and **Warehouse name** required by Iceberg clients. Once enabled, you can create and manage Iceberg tables in that bucket.

* Dashboard

1. In the Cloudflare dashboard, go to the **R2 object storage** page.

[Go to **Overview**](https://dash.cloudflare.com/?to=/:account/r2/overview)

2. Select the bucket you want to enable as a data catalog.

3. Switch to the **Settings** tab, scroll down to **R2 Data Catalog**, and select **Enable**.

4. Once enabled, note the **Catalog URI** and **Warehouse name**.

* Wrangler CLI